Mirror of https://github.com/amithkoujalgi/ollama4j.git, synced 2025-10-27 22:50:41 +01:00

Compare commits

62 Commits

| SHA1 |
|---|
| b456feda64 |
| 1c1452836d |
| 1bca07ecb8 |
| 47c5943137 |
| 3061b2d8ef |
| 531f063cc9 |
| 887a9e1bfc |
| 10e2a606b5 |
| d589315d23 |
| 95cc2164d3 |
| 970d54bcb5 |
| e326936d3d |
| 9b916480b2 |
| 83d292671a |
| dcf2a0fdb6 |
| 1ba0f02af6 |
| 74e6777b7c |
| 849ae77712 |
| fcd0fbe4b3 |
| 066df6b369 |
| 1fbfe8a18c |
| 23785bb5b7 |
| 00cd2a0adf |
| 49fac9d2cf |
| ca1a73fa76 |
| adbf6a8185 |
| 57adaafb42 |
| ad03c784e5 |
| 2e245a0e16 |
| 7c0c4e38ed |
| a0c1184e7b |
| 8c485edb32 |
| 7ce89a3e89 |
| fe43e87e1a |
| f49c6d162a |
| 751f2585b4 |
| a4edcf4e43 |
| d55d1c0fd9 |
| f0e5a9e172 |
| 866c08f590 |
| bec634dd37 |
| cbf65fef48 |
| eee8fe5755 |
| a7a030b9b0 |
| e793320f7c |
| 4c3cf3b335 |
| ecfbc1b394 |
| ceb1e6f338 |
| 6df57c4a23 |
| da6d20d118 |
| 64c629775a |
| 46f2d62fed |
| b91943066e |
| 58d73637bb |
| 0ffaac65d4 |
| 4ce9c4c191 |
| 4681b1986f |
| 89d42fd469 |
| 8a903f695e |
| 3a20af25f1 |
| 24046b6660 |
| 453112d09f |

4
.github/workflows/build-on-pull-request.yml
vendored

@@ -8,6 +8,8 @@ on:
paths:
- 'src/**'
- 'pom.xml'
# Allows you to run this workflow manually from the Actions tab
workflow_dispatch:

concurrency:
group: ${{ github.workflow }}-${{ github.event.pull_request.number || github.ref }}
@@ -52,7 +54,7 @@ jobs:
steps:
- uses: actions/checkout@v5
- name: Use Node.js
uses: actions/setup-node@v5
uses: actions/setup-node@v6
with:
node-version: '20.x'
- run: cd docs && npm ci

6
.github/workflows/codeql.yml
vendored

@@ -32,13 +32,13 @@ jobs:
java-version: '21'

- name: Initialize CodeQL
uses: github/codeql-action/init@v3
uses: github/codeql-action/init@v4
with:
languages: ${{ matrix.language }}

- name: Autobuild
uses: github/codeql-action/autobuild@v3
uses: github/codeql-action/autobuild@v4

- name: Perform CodeQL Analysis
uses: github/codeql-action/analyze@v3
uses: github/codeql-action/analyze@v4

4
.github/workflows/publish-docs.yml
vendored

@@ -40,7 +40,7 @@ jobs:

- uses: actions/checkout@v5
- name: Use Node.js
uses: actions/setup-node@v5
uses: actions/setup-node@v6
with:
node-version: '20.x'
- run: cd docs && npm ci
@@ -54,7 +54,7 @@ jobs:
regex: false

- name: Build with Maven
run: mvn --file pom.xml -U clean package && cp -r ./target/apidocs/. ./docs/build/apidocs
run: mvn --file pom.xml -U clean package -Dgpg.skip=true -DskipTests && cp -r ./target/apidocs/. ./docs/build/apidocs

- name: Doxygen Action
uses: mattnotmitt/doxygen-action@v1.12.0

6
Makefile

@@ -1,3 +1,7 @@
# Default target
.PHONY: all
all: dev build

dev:
@echo "Setting up dev environment..."
@command -v pre-commit >/dev/null 2>&1 || { echo "Error: pre-commit is not installed. Please install it first."; exit 1; }
@@ -43,7 +47,7 @@ doxygen:
@doxygen Doxyfile

javadoc:
@echo "\033[0;34mGenerating Javadocs into '$(javadocfolder)'...\033[0m"
@echo "\033[0;34mGenerating Javadocs...\033[0m"
@mvn clean javadoc:javadoc
@if [ -f "target/reports/apidocs/index.html" ]; then \
echo "\033[0;32mJavadocs generated in target/reports/apidocs/index.html\033[0m"; \

@@ -1,5 +1,5 @@
<div align="center">
<img src='https://raw.githubusercontent.com/ollama4j/ollama4j/65a9d526150da8fcd98e2af6a164f055572bf722/ollama4j.jpeg' width='100' alt="ollama4j-icon">
<img src='https://raw.githubusercontent.com/ollama4j/ollama4j/refs/heads/main/ollama4j-new.jpeg' width='200' alt="ollama4j-icon">

### Ollama4j

95
docs/docs/agent.md
Normal file

@@ -0,0 +1,95 @@
---
sidebar_position: 4

title: Agents 🆕
---

import CodeEmbed from '@site/src/components/CodeEmbed';
import TypewriterTextarea from '@site/src/components/TypewriterTextarea';

# Agents

An **agent** is an intelligent assistant that understands user requests, communicates using LLMs, and performs actions by invoking appropriate tools (exposed as code).

With agents, you can:
- Orchestrate multi-step reasoning and tool use (e.g., answering questions, looking up data, making reservations, sending emails, and more)
- Automatically select and execute the right tools or actions based on user intent
- Maintain conversation context to support dynamic, interactive problem solving
- Adapt behavior, persona, or expertise by simply changing configuration—without changing your Java code

Agents help by acting as an intelligent bridge between users, LLMs, and your application's capabilities. They can automate tasks, provide personalized assistance, and extend what LLMs can do by calling your Java methods or integrating with external systems.

With Ollama4j, creating an agent is as simple as describing its purpose, available tools, behavior, and preferred language model—all defined in a single YAML file.

**Why consider building agents using Ollama4j?**

- **Seamless Customization:** Effortlessly fine-tune your agent's personality, expertise, or workflow by editing the YAML—no need to recompile or modify your Java code.
- **Plug-and-Play Extensibility:** Add new tools or swap out existing logic classes without wrestling with framework internals or glue code.
- **Rapid Iteration:** Experiment freely. Try different models, instructions, and toolsets to explore new behaviors or orchestrations in minutes.
- **Clear Separation of Concerns:** Keep your core business logic (Java) and conversational configuration (YAML) distinct, promoting clarity, maintainability, and collaboration.

---

### Define an Agent in YAML

Specify everything about your agent—what LLM it uses, its “personality,” and all callable tools—in a single YAML file.

**Agent configuration parameters:**

| Field | Description |
|-------------------------|------------------------------------------------------------------------------------------------|
| `name` | Name of your agent. |
| `host` | The base URL for your Ollama server (e.g., `http://localhost:11434`). |
| `model` | The LLM backing your agent (e.g., `llama3`, `gemma`, `mistral`, etc.). |
| `customPrompt` | _(optional)_ System prompt—instructions or persona for your agent. |
| `tools` | List of tools the agent can use. Each tool entry describes the name, function, and parameters. |
| `toolFunctionFQCN` | Fully qualified Java class name implementing the tool logic. Must be present on classpath. |
| `requestTimeoutSeconds` | _(optional)_ How long (seconds) to wait for agent replies. |

YAML makes it effortless to configure and tweak your agent’s powers and behavior—no code changes needed!
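The configuration table can be made concrete with a small Java model. The sketch below is hypothetical: the `AgentConfig` and `ToolEntry` records and their validation rules are illustrative assumptions, not ollama4j classes (the library reads the YAML itself). It only shows which fields are required, which are optional, and how an optional timeout might fall back to a caller-chosen default.

```java
import java.util.List;
import java.util.Optional;

class AgentConfigDemo {
    public static void main(String[] args) {
        AgentConfig config = new AgentConfig(
                "hotel-agent", "http://localhost:11434", "llama3", null,
                List.of(new ToolEntry("room-booking", "Books a hotel room",
                        "com.example.tools.HotelBookingToolFunction")), // hypothetical FQCN
                null);
        System.out.println(config.model() + " with " + config.tools().size()
                + " tool(s), timeout " + config.timeoutSecondsOr(60) + "s");
    }
}

/** Hypothetical model of one entry under `tools` in the agent YAML. */
record ToolEntry(String name, String description, String toolFunctionFQCN) {
    ToolEntry {
        if (name == null || name.isBlank()) {
            throw new IllegalArgumentException("tool name is required");
        }
        if (toolFunctionFQCN == null || toolFunctionFQCN.isBlank()) {
            throw new IllegalArgumentException("toolFunctionFQCN is required for tool " + name);
        }
    }
}

/** Hypothetical model of the top-level agent fields. */
record AgentConfig(String name, String host, String model, String customPrompt,
                   List<ToolEntry> tools, Integer requestTimeoutSeconds) {
    AgentConfig {
        // name, host and model are required; the rest is optional.
        requireNonBlank("name", name);
        requireNonBlank("host", host);
        requireNonBlank("model", model);
        tools = tools == null ? List.of() : List.copyOf(tools); // immutable defensive copy
    }

    private static void requireNonBlank(String field, String value) {
        if (value == null || value.isBlank()) {
            throw new IllegalArgumentException(field + " is required");
        }
    }

    /** customPrompt is optional, so expose it as an Optional. */
    Optional<String> prompt() {
        return Optional.ofNullable(customPrompt);
    }

    /** requestTimeoutSeconds is optional; the caller chooses the fallback. */
    int timeoutSecondsOr(int fallback) {
        return requestTimeoutSeconds == null ? fallback : requestTimeoutSeconds;
    }
}
```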

**Example agent YAML:**

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/resources/agent.yaml" language='yaml'/>

---

### Implement Tool Functions

Your agent calls out to Java classes (Tool Functions). Put these implementations on your classpath and register them in YAML.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolfunctions/HotelBookingLookupToolFunction.java"/>

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolfunctions/HotelBookingToolFunction.java"/>
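Because tools are referenced by `toolFunctionFQCN`, an agent has to turn a class name into an instance at runtime. One plausible way to do that is plain reflection, sketched below. The local `ToolFunction` interface is an assumption modeled on the idea of a function from an argument map to a result, and `EchoBookingLookup` is a made-up tool; consult the ollama4j Javadoc for the real interface before relying on this shape.

```java
import java.util.Map;

class ToolLoader {
    /**
     * Instantiates a tool from its fully qualified class name, the way a
     * toolFunctionFQCN entry might be resolved. The class must be on the
     * classpath and have an accessible no-argument constructor.
     */
    static ToolFunction load(String fqcn) {
        try {
            Class<?> clazz = Class.forName(fqcn);
            if (!ToolFunction.class.isAssignableFrom(clazz)) {
                throw new IllegalArgumentException(fqcn + " does not implement ToolFunction");
            }
            return (ToolFunction) clazz.getDeclaredConstructor().newInstance();
        } catch (ClassNotFoundException e) {
            throw new IllegalArgumentException("Class not on classpath: " + fqcn, e);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + fqcn, e);
        }
    }

    public static void main(String[] args) {
        ToolFunction tool = load("EchoBookingLookup");
        System.out.println(tool.apply(Map.of("bookingId", "HB-123")));
    }
}

/** Local stand-in for a tool interface; an assumption, not ollama4j's exact type. */
interface ToolFunction {
    Object apply(Map<String, Object> arguments);
}

/** Hypothetical tool, standing in for a class such as HotelBookingLookupToolFunction. */
class EchoBookingLookup implements ToolFunction {
    public EchoBookingLookup() {}

    @Override
    public Object apply(Map<String, Object> arguments) {
        return "Booking " + arguments.get("bookingId") + " found";
    }
}
```

`Class.forName` fails with `ClassNotFoundException` when the class is missing, which is one concrete reason a tool class referenced by name has to be on the classpath.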

---

### Instantiating and Running Agents

Once your agent is described in YAML, bringing it to life in Java takes only a couple of lines:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/AgentExample.java"/>

The API takes care of wiring up LLMs, tool invocation, and instruction handling.

Here's a sample interaction:

<TypewriterTextarea
textContent='[You]: Book a hotel in Mysuru for two guests, from July 20 to July 22.
Alright, I have booked the hotel! Room number 10 booked for 2 guests in Mysuru from July 20th to July 22nd. Here is your booking ID: HB-123'
typingSpeed={30}
pauseBetweenSentences={1200}
height='110px'
width='100%'
/>

Here's another one:

<TypewriterTextarea
textContent='[You]: Give me details of booking ID - HB-123.
I found a booking for HB-123. Looks like the hotel is booked for 2 guests. Enjoy your stay!'
typingSpeed={30}
pauseBetweenSentences={1200}
height='90px'
width='100%'
/>

@@ -1,6 +1,6 @@
{
"label": "APIs - Extras",
"position": 4,
"label": "Extras",
"position": 5,
"link": {
"type": "generated-index",
"description": "Details of APIs to handle bunch of extra stuff."

@@ -1,5 +1,5 @@
{
"label": "APIs - Generate",
"label": "Generate",
"position": 3,
"link": {
"type": "generated-index",

@@ -66,11 +66,11 @@ To use a method as a tool within a chat call, follow these steps:
Let's try an example. Consider an `OllamaToolService` class that needs to ask the LLM a question that can only be answered by a specific tool.
This tool is implemented within a `GlobalConstantGenerator` class. Following is the code that exposes an annotated method as a tool:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/annotated/GlobalConstantGenerator.java"/>
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/annotated/GlobalConstantGenerator.java"/>
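Annotation-based tool registration usually relies on runtime reflection: the library scans an object for annotated methods and exposes each one as a named tool. The sketch below shows that mechanism on its own. The `@Tool` annotation, `ToolScanner`, and `GlobalConstants` are hypothetical names chosen for illustration; ollama4j's actual annotations and registration calls are the ones used in the embedded `GlobalConstantGenerator` example.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

class ToolScanner {
    /** Hypothetical marker annotation; RUNTIME retention makes it visible to reflection. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface Tool {
        String name();
        String description();
    }

    /** Hypothetical tool holder, loosely modeled on the GlobalConstantGenerator idea. */
    static class GlobalConstants {
        @Tool(name = "pi", description = "Returns the value of pi")
        public double pi() {
            return Math.PI;
        }

        public String notATool() {
            return "ignored"; // no @Tool annotation, so the scanner skips it
        }
    }

    /** Collects every public @Tool method on the instance, keyed by tool name. */
    static Map<String, Method> scan(Object instance) {
        Map<String, Method> tools = new TreeMap<>();
        for (Method m : instance.getClass().getMethods()) {
            Tool t = m.getAnnotation(Tool.class);
            if (t != null) {
                tools.put(t.name(), m);
            }
        }
        return tools;
    }

    /** Invokes a discovered no-argument tool by name. */
    static Object call(Object instance, String toolName) throws ReflectiveOperationException {
        Method m = scan(instance).get(toolName);
        if (m == null) {
            throw new IllegalArgumentException("Unknown tool: " + toolName);
        }
        return m.invoke(instance);
    }

    public static void main(String[] args) throws Exception {
        GlobalConstants constants = new GlobalConstants();
        System.out.println(scan(constants).keySet());
        System.out.println(call(constants, "pi"));
    }
}
```

`RetentionPolicy.RUNTIME` is essential here: with the default retention the annotation is not available through reflection, and the scan would find nothing.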

The annotated method can then be used as a tool in the chat session:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/annotated/AnnotatedToolCallingExample.java"/>
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/AnnotatedToolCallingExample.java"/>

Running the above would produce a response similar to:

@@ -63,7 +63,7 @@ You will get a response similar to:

### Using a simple Console Output Stream Handler

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/ConsoleOutputStreamHandlerExample.java" />
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/ChatWithConsoleHandlerExample.java" />
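A console stream handler boils down to a callback that runs once per streamed chunk. Here is a minimal sketch of that pattern built on `java.util.function.Consumer`; `ConsoleTokenHandler` and the hard-coded token list are assumptions standing in for the library's real handler type and a model's streamed output.

```java
import java.io.PrintStream;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical handler: echoes each token as it arrives and keeps the full text. */
class ConsoleTokenHandler implements Consumer<String> {
    private final PrintStream out;
    private final StringBuilder full = new StringBuilder();

    ConsoleTokenHandler(PrintStream out) {
        this.out = out;
    }

    @Override
    public void accept(String token) {
        out.print(token);   // show the token immediately, without a newline
        out.flush();
        full.append(token); // keep the aggregate response for later use
    }

    String fullResponse() {
        return full.toString();
    }

    public static void main(String[] args) {
        ConsoleTokenHandler handler = new ConsoleTokenHandler(System.out);
        // Simulated stream of token chunks, as a model might emit them.
        List.of("The ", "sky ", "is ", "blue.").forEach(handler);
        System.out.println();
        System.out.println("Collected: " + handler.fullResponse());
    }
}
```

Keeping the aggregate in the handler lets the caller print tokens live and still use the complete answer afterwards.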

### With a Stream Handler to receive the tokens as they are generated

@@ -19,11 +19,11 @@ You can use this feature to receive both the thinking and the response as separa
You will get a response similar to:

:::tip[Thinking Tokens]
User asks "Who are you?" It's a request for identity. As ChatGPT, we should explain that I'm an AI developed by OpenAI, etc. Provide friendly explanation.
USER ASKS "WHO ARE YOU?" IT'S A REQUEST FOR IDENTITY. AS CHATGPT, WE SHOULD EXPLAIN THAT I'M AN AI DEVELOPED BY OPENAI, ETC. PROVIDE FRIENDLY EXPLANATION.
:::

:::tip[Response Tokens]
I’m ChatGPT, a large language model created by OpenAI. I’m designed to understand and generate natural‑language text, so I can answer questions, help with writing, explain concepts, brainstorm ideas, and chat about almost any topic. I don’t have a personal life or consciousness—I’m a tool that processes input and produces responses based on patterns in the data I was trained on. If you have any questions about how I work or what I can do, feel free to ask!
i’m chatgpt, a large language model created by openai. i’m designed to understand and generate natural‑language text, so i can answer questions, help with writing, explain concepts, brainstorm ideas, and chat about almost any topic. i don’t have a personal life or consciousness—i’m a tool that processes input and produces responses based on patterns in the data i was trained on. if you have any questions about how i work or what i can do, feel free to ask!
:::
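In the sample output above, the thinking tokens appear in upper case and the response tokens in lower case, which suggests the example routes each kind of token to its own handler and transforms it there. A minimal sketch of that routing, assuming a hypothetical `Chunk` record that tags each streamed piece as thinking or response (ollama4j's real streaming types may differ):

```java
import java.util.List;
import java.util.Locale;
import java.util.function.Consumer;

class ThinkingStreamDemo {
    /** Hypothetical streamed chunk: either reasoning ("thinking") or answer text. */
    record Chunk(boolean thinking, String text) {}

    /** Routes each chunk to the handler for its kind, in stream order. */
    static void dispatch(List<Chunk> stream, Consumer<String> onThinking, Consumer<String> onResponse) {
        for (Chunk c : stream) {
            (c.thinking() ? onThinking : onResponse).accept(c.text());
        }
    }

    public static void main(String[] args) {
        StringBuilder thinking = new StringBuilder();
        StringBuilder response = new StringBuilder();
        List<Chunk> stream = List.of(
                new Chunk(true, "User asks \"Who are you?\" "),
                new Chunk(false, "I'm an AI assistant."));
        // Upper-case thinking tokens and lower-case response tokens,
        // mirroring the transformation visible in the sample output.
        dispatch(stream,
                t -> thinking.append(t.toUpperCase(Locale.ROOT)),
                r -> response.append(r.toLowerCase(Locale.ROOT)));
        System.out.println("Thinking: " + thinking);
        System.out.println("Response: " + response);
    }
}
```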

### Generate response and receive the thinking and response tokens streamed
@@ -34,7 +34,7 @@ You will get a response similar to:

:::tip[Thinking Tokens]
<TypewriterTextarea
textContent={`User asks "Who are you?" It's a request for identity. As ChatGPT, we should explain that I'm an AI developed by OpenAI, etc. Provide friendly explanation.`}
textContent={`USER ASKS "WHO ARE YOU?" WE SHOULD EXPLAIN THAT I'M AN AI BY OPENAI, ETC.`}
typingSpeed={10}
pauseBetweenSentences={1200}
height="auto"
@@ -45,7 +45,7 @@ style={{ whiteSpace: 'pre-line' }}

:::tip[Response Tokens]
<TypewriterTextarea
textContent={`I’m ChatGPT, a large language model created by OpenAI. I’m designed to understand and generate natural‑language text, so I can answer questions, help with writing, explain concepts, brainstorm ideas, and chat about almost any topic. I don’t have a personal life or consciousness—I’m a tool that processes input and produces responses based on patterns in the data I was trained on. If you have any questions about how I work or what I can do, feel free to ask!`}
textContent={`i’m chatgpt, a large language model created by openai.`}
typingSpeed={10}
pauseBetweenSentences={1200}
height="auto"

@@ -3,6 +3,7 @@ sidebar_position: 4
---

import CodeEmbed from '@site/src/components/CodeEmbed';
import TypewriterTextarea from '@site/src/components/TypewriterTextarea';

# Generate with Images

@@ -17,13 +18,11 @@ recommended.

:::

## Synchronous mode

If you have this image downloaded and you pass the path to the downloaded image to the following code:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/GenerateWithImageFile.java" />
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/GenerateWithImageFileSimple.java" />

You will get a response similar to:

@@ -32,30 +31,22 @@ This image features a white boat with brown cushions, where a dog is sitting on
be enjoying its time outdoors, perhaps on a lake.
:::
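Ollama's generate endpoint takes images as base64-encoded strings in an `images` array, so a local file has to be read and encoded before it is sent. The sketch below covers just that step plus a hand-built, non-streaming request body, using only JDK classes. The model name `llava`, the prompt, and the default file name are placeholders, and ollama4j normally builds and sends this request for you.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

class ImagePayload {
    /** Reads an image file and returns its bytes as a base64 string. */
    static String encodeImage(Path imagePath) throws IOException {
        return Base64.getEncoder().encodeToString(Files.readAllBytes(imagePath));
    }

    /** Minimal JSON string quoting: escapes backslashes and quotes only, not control characters. */
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    /** Builds a non-streaming /api/generate request body carrying one image. */
    static String generateBody(String model, String prompt, String base64Image) {
        return "{\"model\":" + quote(model)
                + ",\"prompt\":" + quote(prompt)
                + ",\"images\":[" + quote(base64Image) + "]"
                + ",\"stream\":false}";
    }

    public static void main(String[] args) throws IOException {
        // Placeholder file name; pass a real image path as the first argument.
        Path image = Path.of(args.length > 0 ? args[0] : "dog-on-a-boat.jpg");
        String body = generateBody("llava", "What's in this image?", encodeImage(image));
        System.out.println(body.length() + " characters of JSON ready for POST /api/generate");
    }
}
```

The escaping in `quote` is deliberately minimal; for arbitrary prompts, a real JSON library is the safer choice.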

# Generate with Image URLs

This API lets you ask questions along with the image files to the LLMs.
This API corresponds to
the [completion](https://github.com/jmorganca/ollama/blob/main/docs/api.md#generate-a-completion) API.

:::note

Executing this on Ollama server running in CPU-mode will take longer to generate response. Hence, GPU-mode is
recommended.

:::

## Ask (Sync)

Passing the link of this image the following code:
If you want the response to be streamed, you can use the following code:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/GenerateWithImageURL.java" />
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/GenerateWithImageFileStreaming.java" />

You will get a response similar to:

:::tip[LLM Response]
This image features a white boat with brown cushions, where a dog is sitting on the back of the boat. The dog seems to
be enjoying its time outdoors, perhaps on a lake.
:::tip[Response Tokens]
<TypewriterTextarea
textContent={`This image features a white boat with brown cushions, where a dog is sitting on the back of the boat. The dog seems to be enjoying its time outdoors, perhaps on a lake.`}
typingSpeed={10}
pauseBetweenSentences={1200}
height="auto"
width="100%"
style={{ whiteSpace: 'pre-line' }}
/>
:::

@@ -36,19 +36,19 @@ We can create static functions as our tools.
This function takes the arguments `location` and `fuelType` and performs an operation with these arguments and returns
fuel price value.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/tools/FuelPriceTool.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolfunctions/FuelPriceToolFunction.java"/ >

This function takes the argument `city` and performs an operation with the argument and returns the weather for a
location.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/tools/WeatherTool.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolfunctions/WeatherToolFunction.java"/ >
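A tool function of this kind reads its named arguments from a map and returns a value that is handed back to the model. The sketch below imitates the fuel price tool with a hard-coded table of made-up prices; the class name, argument handling, and result wording are illustrative assumptions, while the real implementations are the embedded `FuelPriceToolFunction` and `WeatherToolFunction` classes.

```java
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

class FuelPriceSketch {
    // Made-up sample prices for illustration only; a real tool would query a pricing service.
    private static final Map<String, Double> PRICES = Map.of(
            "bengaluru:petrol", 100.0,
            "bengaluru:diesel", 90.0);

    /** Tool body: reads the model-supplied arguments and returns a result for the model. */
    static final Function<Map<String, Object>, Object> FUEL_PRICE = arguments -> {
        Object location = arguments.get("location");
        Object fuelType = arguments.get("fuelType");
        if (location == null || fuelType == null) {
            return "Missing required argument: location and fuelType are both needed";
        }
        String key = location.toString().toLowerCase(Locale.ROOT) + ":"
                + fuelType.toString().toLowerCase(Locale.ROOT);
        Double price = PRICES.get(key);
        return price == null
                ? "No price available for " + fuelType + " in " + location
                : "Current price of " + fuelType + " in " + location + " is " + price;
    };

    public static void main(String[] args) {
        System.out.println(FUEL_PRICE.apply(Map.of("location", "Bengaluru", "fuelType", "petrol")));
    }
}
```

Returning a readable message for missing arguments, rather than throwing, gives the model something it can relay or act on in the conversation.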

Another way to create our tools is by creating classes by extending `ToolFunction`.

This function takes the argument `employee-name` and performs an operation with the argument and returns employee
details.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/tools/DBQueryFunction.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolfunctions/EmployeeFinderToolFunction.java"/ >

### Define Tool Specifications

@@ -57,21 +57,21 @@ Lets define a sample tool specification called **Fuel Price Tool** for getting t
- Specify the function `name`, `description`, and `required` properties (`location` and `fuelType`).
- Associate the `getCurrentFuelPrice` function you defined earlier.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/toolspecs/FuelPriceToolSpec.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolspecs/FuelPriceToolSpec.java"/ >

Lets also define a sample tool specification called **Weather Tool** for getting the current weather.

- Specify the function `name`, `description`, and `required` property (`city`).
- Associate the `getCurrentWeather` function you defined earlier.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/toolspecs/WeatherToolSpec.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolspecs/WeatherToolSpec.java"/ >

Lets also define a sample tool specification called **DBQueryFunction** for getting the employee details from database.

- Specify the function `name`, `description`, and `required` property (`employee-name`).
- Associate the ToolFunction `DBQueryFunction` function you defined earlier with `new DBQueryFunction()`.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/toolcalling/toolspecs/DatabaseQueryToolSpec.java"/ >
<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/tools/toolspecs/EmployeeFinderToolSpec.java"/ >

Now put it all together by registering the tools and prompting with tools.
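Conceptually, registering tools builds a lookup from tool name to specification, and each tool call the model emits is validated against that specification before the function runs. The sketch below models that flow with hypothetical `ToolRegistry` and `ToolSpec` types and a made-up `get-employee-details` tool; it is not ollama4j's specification API, which the embedded examples show.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class ToolRegistry {
    /** Hypothetical tool specification: name, description, required parameters, function. */
    record ToolSpec(String name, String description, List<String> required,
                    Function<Map<String, Object>, Object> function) {}

    private final Map<String, ToolSpec> specs = new LinkedHashMap<>();

    void register(ToolSpec spec) {
        specs.put(spec.name(), spec);
    }

    /** Checks the required arguments, then invokes the tool the model asked for. */
    Object dispatch(String toolName, Map<String, Object> arguments) {
        ToolSpec spec = specs.get(toolName);
        if (spec == null) {
            throw new IllegalArgumentException("No tool registered as " + toolName);
        }
        for (String param : spec.required()) {
            if (!arguments.containsKey(param)) {
                throw new IllegalArgumentException(toolName + " is missing required argument " + param);
            }
        }
        return spec.function().apply(arguments);
    }

    public static void main(String[] args) {
        ToolRegistry registry = new ToolRegistry();
        registry.register(new ToolSpec("get-employee-details", "Looks up an employee by name",
                List.of("employee-name"),
                arguments -> "Details for " + arguments.get("employee-name")));
        // Simulates the model requesting a tool call with these arguments.
        System.out.println(registry.dispatch("get-employee-details", Map.of("employee-name", "Rahul")));
    }
}
```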

@@ -1,5 +1,5 @@
{
"label": "APIs - Manage Models",
"label": "Manage Models",
"position": 2,
"link": {
"type": "generated-index",

@@ -15,13 +15,13 @@ This API lets you create a custom model on the Ollama server.
You would see these logs while the custom model is being created:

```
{"status":"using existing layer sha256:fad2a06e4cc705c2fa8bec5477ddb00dc0c859ac184c34dcc5586663774161ca"}
{"status":"using existing layer sha256:41c2cf8c272f6fb0080a97cd9d9bd7d4604072b80a0b10e7d65ca26ef5000c0c"}
{"status":"using existing layer sha256:1da0581fd4ce92dcf5a66b1da737cf215d8dcf25aa1b98b44443aaf7173155f5"}
{"status":"creating new layer sha256:941b69ca7dc2a85c053c38d9e8029c9df6224e545060954fa97587f87c044a64"}
{"status":"using existing layer sha256:f02dd72bb2423204352eabc5637b44d79d17f109fdb510a7c51455892aa2d216"}
{"status":"writing manifest"}
{"status":"success"}
using existing layer sha256:fad2a06e4cc705c2fa8bec5477ddb00dc0c859ac184c34dcc5586663774161ca
using existing layer sha256:41c2cf8c272f6fb0080a97cd9d9bd7d4604072b80a0b10e7d65ca26ef5000c0c
using existing layer sha256:1da0581fd4ce92dcf5a66b1da737cf215d8dcf25aa1b98b44443aaf7173155f5
creating new layer sha256:941b69ca7dc2a85c053c38d9e8029c9df6224e545060954fa97587f87c044a64
using existing layer sha256:f02dd72bb2423204352eabc5637b44d79d17f109fdb510a7c51455892aa2d216
writing manifest
success
```
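The listing above contains both forms of these log lines: raw JSON status objects and the bare status text. If you read the raw stream yourself, pulling out the status text can be as small as the sketch below. It uses a regular expression only to stay dependency-free and assumes each line is a flat object with a string `status` field; production code should use a JSON parser such as Jackson.

```java
import java.util.List;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class StatusLines {
    // Matches "status":"..." and captures the value; escape sequences are kept as-is, not decoded.
    private static final Pattern STATUS =
            Pattern.compile("\"status\"\\s*:\\s*\"((?:[^\"\\\\]|\\\\.)*)\"");

    /** Returns the status text from one streamed JSON line, if present. */
    static Optional<String> statusOf(String jsonLine) {
        Matcher m = STATUS.matcher(jsonLine);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "{\"status\":\"writing manifest\"}",
                "{\"status\":\"success\"}");
        lines.stream()
                .map(StatusLines::statusOf)
                .flatMap(Optional::stream)
                .forEach(System.out::println);
    }
}
```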

|

||||

Once created, you can see it when you use [list models](./list-models) API.

|

||||

|

||||

|

||||

90

docs/docs/metrics.md

Normal file

90

docs/docs/metrics.md

Normal file

@@ -0,0 +1,90 @@

|

||||

---

|

||||

sidebar_position: 6

|

||||

|

||||

title: Metrics 🆕

|

||||

---

|

||||

|

||||

import CodeEmbed from '@site/src/components/CodeEmbed';

|

||||

|

||||

# Metrics

|

||||

|

||||

:::warning[Note]

|

||||

This is work in progress

|

||||

:::

|

||||

|

||||

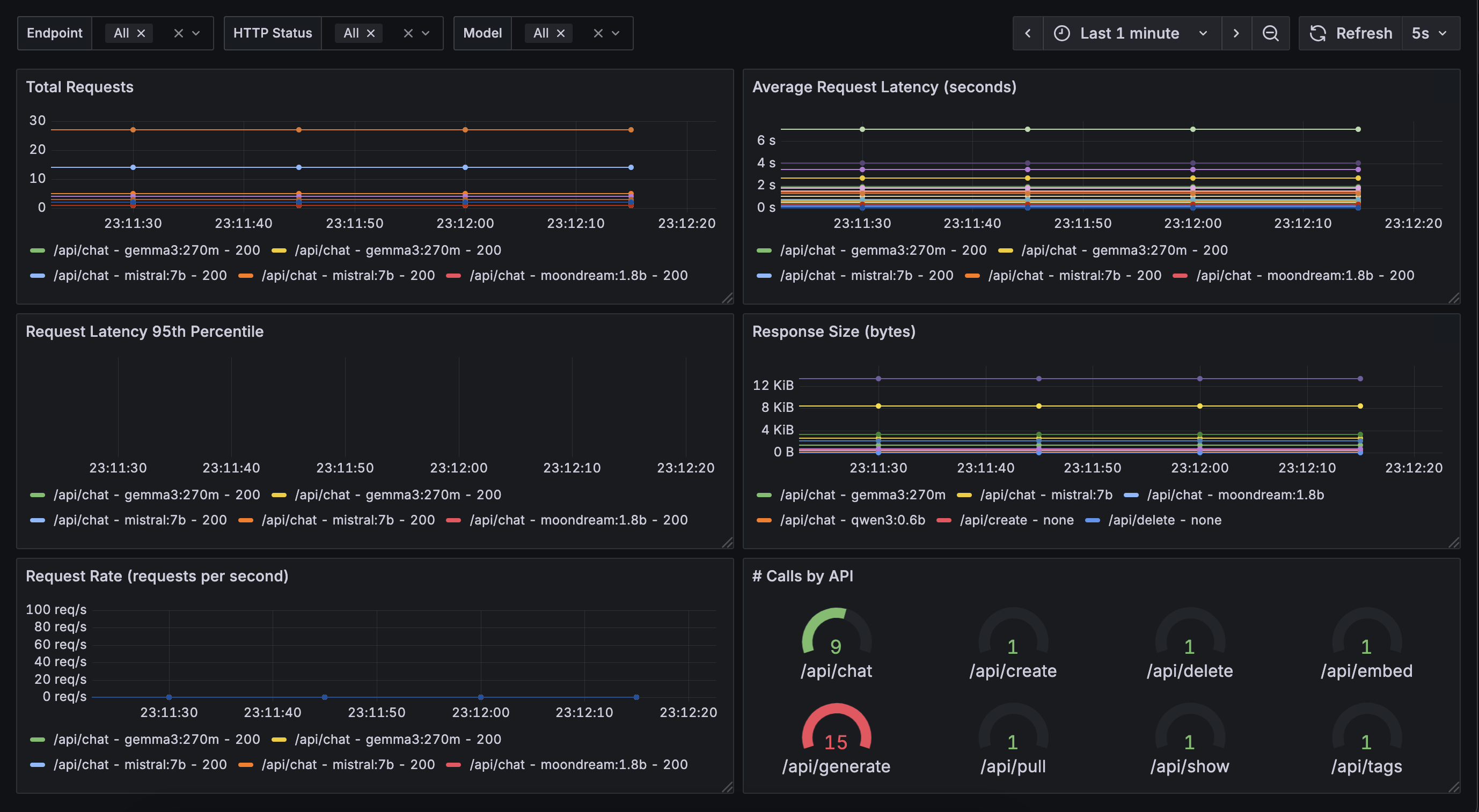

Monitoring and understanding the performance of your models and requests is crucial for optimizing and maintaining your

|

||||

applications. The Ollama4j library provides built-in support for collecting and exposing various metrics, such as

|

||||

request counts, response times, and error rates. These metrics can help you:

|

||||

|

||||

- Track usage patterns and identify bottlenecks

|

||||

- Monitor the health and reliability of your services

|

||||

- Set up alerts for abnormal behavior

|

||||

- Gain insights for scaling and optimization

|

||||

|

||||

## Available Metrics

|

||||

|

||||

Ollama4j exposes several key metrics, including:

|

||||

|

||||

- **Total Requests**: The number of requests processed by the model.

|

||||

- **Response Time**: The time taken to generate a response for each request.

|

||||

- **Error Rate**: The percentage of requests that resulted in errors.

|

||||

- **Active Sessions**: The number of concurrent sessions or users.

|

||||

|

||||

These metrics can be accessed programmatically or integrated with monitoring tools such as Prometheus or Grafana for

|

||||

visualization and alerting.
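To make the four metrics listed above concrete, here is a small in-memory collector that derives them from raw request events. It is a hypothetical illustration of the arithmetic (for example, error rate = failed requests / total requests × 100), not ollama4j's metrics implementation, which this page describes as being exposed through a Prometheus `/metrics` endpoint.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical in-memory collector for total requests, response time, error rate and sessions. */
class RequestMetrics {
    private final AtomicLong totalRequests = new AtomicLong();
    private final AtomicLong failedRequests = new AtomicLong();
    private final AtomicLong totalLatencyMillis = new AtomicLong();
    private final AtomicInteger activeSessions = new AtomicInteger();

    void sessionStarted() { activeSessions.incrementAndGet(); }

    void sessionEnded() { activeSessions.decrementAndGet(); }

    /** Records one finished request with its latency and outcome. */
    void recordRequest(long latencyMillis, boolean failed) {
        totalRequests.incrementAndGet();
        totalLatencyMillis.addAndGet(latencyMillis);
        if (failed) {
            failedRequests.incrementAndGet();
        }
    }

    long totalRequests() { return totalRequests.get(); }

    int activeSessions() { return activeSessions.get(); }

    /** Mean response time in milliseconds; 0 when nothing has been recorded. */
    double averageResponseMillis() {
        long n = totalRequests.get();
        return n == 0 ? 0.0 : (double) totalLatencyMillis.get() / n;
    }

    /** Failed requests as a percentage of all requests; 0 when nothing has been recorded. */
    double errorRatePercent() {
        long n = totalRequests.get();
        return n == 0 ? 0.0 : 100.0 * failedRequests.get() / n;
    }

    public static void main(String[] args) {
        RequestMetrics metrics = new RequestMetrics();
        metrics.sessionStarted();
        metrics.recordRequest(120, false);
        metrics.recordRequest(300, true);
        metrics.recordRequest(180, false);
        metrics.recordRequest(200, false);
        System.out.printf("requests=%d avg=%.1fms errors=%.1f%% sessions=%d%n",
                metrics.totalRequests(), metrics.averageResponseMillis(),
                metrics.errorRatePercent(), metrics.activeSessions());
    }
}
```

Each counter is atomic, but reading several counters is not one consistent snapshot; for dashboards that trade-off is usually acceptable.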

## Example Metrics Dashboard

Below is an example of a metrics dashboard visualizing some of these key statistics:

## Example: Accessing Metrics in Java

You can easily access and display metrics in your Java application using Ollama4j.

Make sure you have added the `simpleclient_httpserver` dependency to your app so that it can expose metrics via the
`/metrics` endpoint:

```xml

<dependency>
<groupId>io.prometheus</groupId>
<artifactId>simpleclient_httpserver</artifactId>
<version>0.16.0</version>
</dependency>
```

Here is a sample code snippet demonstrating how to retrieve and print metrics on Grafana:

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/src/main/java/io/github/ollama4j/examples/MetricsExample.java" />

This starts a simple HTTP server with the `/metrics` endpoint enabled. Metrics will then be available
at: http://localhost:8080/metrics
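For a sense of what a `/metrics` endpoint actually serves, the sketch below exposes a single counter in the Prometheus text exposition format using only the JDK's built-in `com.sun.net.httpserver.HttpServer`. It stands in for the `simpleclient_httpserver` setup described above and is not how ollama4j implements it; the metric name `ollama_requests_total` is an assumption, and port 8080 simply matches the URL above.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.atomic.AtomicLong;

class MetricsEndpoint {
    static final AtomicLong REQUESTS = new AtomicLong();

    /** Renders one counter in the Prometheus text exposition format. */
    static String render(long requests) {
        return "# HELP ollama_requests_total Total requests sent to Ollama.\n"
                + "# TYPE ollama_requests_total counter\n"
                + "ollama_requests_total " + requests + "\n";
    }

    /** Starts an HTTP server that serves the rendered metrics at /metrics. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/metrics", exchange -> {
            byte[] body = render(REQUESTS.get()).getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/plain; version=0.0.4; charset=utf-8");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        REQUESTS.addAndGet(3); // pretend three requests were made
        start(8080);
        System.out.println("Serving http://localhost:8080/metrics (Ctrl+C to stop)");
    }
}
```

A Prometheus server scraping this URL would record `ollama_requests_total`, which Grafana can then chart.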

## Integrating with Monitoring Tools

### Grafana

Use the following sample `docker-compose` file to host a basic Grafana container.

<CodeEmbed src="https://raw.githubusercontent.com/ollama4j/ollama4j-examples/refs/heads/main/docker/docker-compose.yml" />

And run:

```shell
docker-compose -f path/to/your/docker-compose.yml up
```

This starts Grafana at http://localhost:3000

[//]: # (To integrate Ollama4j metrics with external monitoring systems, you can export the metrics endpoint and configure your)

[//]: # (monitoring tool to scrape or collect the data. Refer to the [integration guide](../integration/monitoring.md) for)

[//]: # (detailed instructions.)

[//]: # ()

[//]: # (For more information on customizing and extending metrics, see the [API documentation](../api/metrics.md).)

721
docs/package-lock.json
generated

File diff suppressed because it is too large

@@ -15,23 +15,24 @@
},
"dependencies": {
"@docsearch/js": "^4.1.0",
"@docusaurus/core": "^3.9.0",
"@docusaurus/plugin-google-gtag": "^3.9.1",
"@docusaurus/preset-classic": "^3.9.1",
"@docusaurus/theme-mermaid": "^3.9.1",
"@docusaurus/core": "^3.9.2",
"@docusaurus/plugin-google-gtag": "^3.9.2",
"@docusaurus/preset-classic": "^3.9.2",
"@docusaurus/theme-mermaid": "^3.9.2",
"@docusaurus/plugin-content-docs": "^3.9.2",
"@iconify/react": "^6.0.2",
"@mdx-js/react": "^3.1.1",
"clsx": "^2.1.1",
"font-awesome": "^4.7.0",
"prism-react-renderer": "^2.4.1",
"react": "^19.1.1",
"react-dom": "^19.1.1",
"react": "^19.2.0",
"react-dom": "^19.2.0",
"react-icons": "^5.5.0",
"react-image-gallery": "^1.4.0"
},
"devDependencies": {
"@docusaurus/module-type-aliases": "^3.9.1",
"@docusaurus/types": "^3.4.0"
"@docusaurus/module-type-aliases": "^3.9.2",
"@docusaurus/types": "^3.9.2"
},
"browserslist": {
"production": [

@@ -1,84 +1,14 @@
// import React, { useState, useEffect } from 'react';
// import CodeBlock from '@theme/CodeBlock';
// import Icon from '@site/src/components/Icon';


// const CodeEmbed = ({ src }) => {
// const [code, setCode] = useState('');
// const [loading, setLoading] = useState(true);
// const [error, setError] = useState(null);

// useEffect(() => {
// let isMounted = true;

// const fetchCodeFromUrl = async (url) => {
// if (!isMounted) return;

// setLoading(true);
// setError(null);

// try {
// const response = await fetch(url);
// if (!response.ok) {
// throw new Error(`HTTP error! status: ${response.status}`);
// }
// const data = await response.text();
// if (isMounted) {
// setCode(data);
// }
// } catch (err) {
// console.error('Failed to fetch code:', err);
// if (isMounted) {
// setError(err);
// setCode(`// Failed to load code from ${url}\n// ${err.message}`);
// }
// } finally {
// if (isMounted) {
// setLoading(false);
// }
// }
// };

// if (src) {
// fetchCodeFromUrl(src);
// }

// return () => {
// isMounted = false;
// };
// }, [src]);

// const githubUrl = src ? src.replace('https://raw.githubusercontent.com', 'https://github.com').replace('/refs/heads/', '/blob/') : null;
// const fileName = src ? src.substring(src.lastIndexOf('/') + 1) : null;

// return (
// loading ? (
// <div>Loading code...</div>
// ) : error ? (
// <div>Error: {error.message}</div>
// ) : (
// <div style={{ backgroundColor: 'transparent', padding: '0px', borderRadius: '5px' }}>
// <div style={{ textAlign: 'right' }}>
// {githubUrl && (
// <a href={githubUrl} target="_blank" rel="noopener noreferrer" style={{ paddingRight: '15px', color: 'gray', fontSize: '0.8em', fontStyle: 'italic', display: 'inline-flex', alignItems: 'center' }}>
// View on GitHub
// <Icon icon="mdi:github" height="48" />
// </a>
// )}
// </div>
// <CodeBlock title={fileName} className="language-java">{code}</CodeBlock>
// </div>
// )
// );
// };

// export default CodeEmbed;
import React, { useState, useEffect } from 'react';
import React, {useState, useEffect} from 'react';
import CodeBlock from '@theme/CodeBlock';
import Icon from '@site/src/components/Icon';


const CodeEmbed = ({ src }) => {
/**
* CodeEmbed component to display code fetched from a URL in a CodeBlock.
* @param {object} props
* @param {string} props.src - Source URL to fetch the code from.
* @param {string} [props.language='java'] - Language for syntax highlighting in CodeBlock.
*/
const CodeEmbed = ({src, language = 'java'}) => {
const [code, setCode] = useState('');
const [loading, setLoading] = useState(true);
const [error, setError] = useState(null);
@@ -127,7 +57,7 @@ const CodeEmbed = ({ src }) => {
const fileName = src ? src.substring(src.lastIndexOf('/') + 1) : null;

const title = (
<div style={{ display: 'flex', justifyContent: 'space-between', alignItems: 'center' }}>
<div style={{display: 'flex', justifyContent: 'space-between', alignItems: 'center'}}>
<a
href={githubUrl}
target="_blank"
@@ -146,9 +76,15 @@ const CodeEmbed = ({ src }) => {
<span>{fileName}</span>
</a>

|

||||

{githubUrl && (

|

||||

<a href={githubUrl} target="_blank" rel="noopener noreferrer" style={{ color: 'gray', fontSize: '0.9em', fontStyle: 'italic', display: 'inline-flex', alignItems: 'center' }}>

|

||||

<a href={githubUrl} target="_blank" rel="noopener noreferrer" style={{

|

||||

color: 'gray',

|

||||

fontSize: '0.9em',

|

||||

fontStyle: 'italic',

|

||||

display: 'inline-flex',

|

||||

alignItems: 'center'

|

||||

}}>

|

||||

View on GitHub

|

||||

<Icon icon="mdi:github" height="1em" />

|

||||

<Icon icon="mdi:github" height="1em"/>

|

||||

</a>

|

||||

)}

|

||||

</div>

|

||||

@@ -160,8 +96,8 @@ const CodeEmbed = ({ src }) => {

|

||||

) : error ? (

|

||||

<div>Error: {error.message}</div>

|

||||

) : (

|

||||

<div style={{ backgroundColor: 'transparent', padding: '0px', borderRadius: '5px' }}>

|

||||

<CodeBlock title={title} className="language-java">{code}</CodeBlock>

|

||||

<div style={{backgroundColor: 'transparent', padding: '0px', borderRadius: '5px'}}>

|

||||

<CodeBlock title={title} language={language}>{code}</CodeBlock>

|

||||

</div>

|

||||

)

|

||||

);

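In the new `CodeEmbed`, both the "View on GitHub" link and the title are derived from `src`: two string replacements turn a `raw.githubusercontent.com` URL into a `github.com/.../blob/...` URL, and the file name is whatever follows the last `/`. A minimal Java restatement of that mapping follows so it can be checked in isolation; `CodeEmbedUrls` and its methods are hypothetical names, and the URL is a made-up example. Because JavaScript's `String.prototype.replace` with a string pattern replaces only the first match, the sketch uses a first-occurrence helper rather than Java's replace-all `String.replace`.

```java
/**
 * Hypothetical Java restatement of how CodeEmbed derives its GitHub link
 * and file name from a raw.githubusercontent.com URL.
 */
final class CodeEmbedUrls {

    private CodeEmbedUrls() {}

    /** Replaces only the first occurrence of target, like JS String.replace(string, string). */
    static String replaceFirstLiteral(String s, String target, String replacement) {
        int i = s.indexOf(target);
        return i < 0 ? s : s.substring(0, i) + replacement + s.substring(i + target.length());
    }

    /** raw.githubusercontent.com URL to a github.com "blob" URL; null in, null out. */
    static String toGithubUrl(String src) {
        if (src == null) {
            return null;
        }
        String u = replaceFirstLiteral(src, "https://raw.githubusercontent.com", "https://github.com");
        return replaceFirstLiteral(u, "/refs/heads/", "/blob/");
    }

    /** Everything after the last '/', mirroring src.substring(src.lastIndexOf('/') + 1). */
    static String fileName(String src) {
        return src == null ? null : src.substring(src.lastIndexOf('/') + 1);
    }

    public static void main(String[] args) {
        // Made-up example URL, not a real repository.
        String src = "https://raw.githubusercontent.com/example-org/example-repo/refs/heads/main/src/Example.java";
        System.out.println(toGithubUrl(src));
        System.out.println(fileName(src));
    }
}
```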
BIN

ollama4j-new.jpeg

Normal file

Binary file not shown.

Size: 67 KiB

22

pom.xml

@@ -19,7 +19,7 @@
<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
<maven-surefire-plugin.version>3.5.4</maven-surefire-plugin.version>
<maven-failsafe-plugin.version>3.5.4</maven-failsafe-plugin.version>
<lombok.version>1.18.40</lombok.version>
<lombok.version>1.18.42</lombok.version>
</properties>

<developers>
@@ -80,6 +80,7 @@
<configuration>
<!-- to disable the "missing" warnings. Remove the doclint to enable warnings-->
<doclint>all,-missing</doclint>
<failOnError>false</failOnError>
</configuration>
<executions>
<execution>
@@ -259,6 +260,11 @@
<artifactId>jackson-databind</artifactId>
<version>2.20.0</version>
</dependency>
<dependency>
<groupId>com.fasterxml.jackson.dataformat</groupId>
<artifactId>jackson-dataformat-yaml</artifactId>
<version>2.20.0</version>
</dependency>
<dependency>
<groupId>com.fasterxml.jackson.datatype</groupId>
<artifactId>jackson-datatype-jsr310</artifactId>
@@ -267,7 +273,7 @@
<dependency>
<groupId>ch.qos.logback</groupId>
<artifactId>logback-classic</artifactId>
<version>1.5.18</version>
<version>1.5.20</version>
<scope>test</scope>
</dependency>
<dependency>
@@ -275,11 +281,10 @@
<artifactId>slf4j-api</artifactId>
<version>2.0.17</version>
</dependency>

<dependency>
<groupId>org.junit.jupiter</groupId>
<artifactId>junit-jupiter-api</artifactId>
<version>5.13.4</version>
<version>6.0.0</version>
<scope>test</scope>
</dependency>
<dependency>
@@ -294,7 +299,6 @@
<version>20250517</version>
<scope>test</scope>
</dependency>

<dependency>
<groupId>org.testcontainers</groupId>
<artifactId>ollama</artifactId>
@@ -307,14 +311,12 @@
<version>1.21.3</version>
<scope>test</scope>
</dependency>

<!-- Prometheus metrics dependencies -->
<dependency>
<groupId>io.prometheus</groupId>
<artifactId>simpleclient</artifactId>
<version>0.16.0</version>
</dependency>

<dependency>
<groupId>com.google.guava</groupId>
<artifactId>guava</artifactId>
@@ -345,7 +347,7 @@
<plugin>
<groupId>org.sonatype.central</groupId>
<artifactId>central-publishing-maven-plugin</artifactId>
<version>0.8.0</version>
<version>0.9.0</version>
<extensions>true</extensions>
<configuration>
<publishingServerId>mvn-repo-id</publishingServerId>
@@ -371,7 +373,7 @@
<plugin>
<groupId>org.jacoco</groupId>
<artifactId>jacoco-maven-plugin</artifactId>
<version>0.8.13</version>
<version>0.8.14</version>
<executions>
<execution>
<goals>
@@ -482,7 +484,7 @@
<plugin>
<groupId>org.jacoco</groupId>
<artifactId>jacoco-maven-plugin</artifactId>
<version>0.8.13</version>
<version>0.8.14</version>
<executions>
<execution>
<goals>

@@ -70,10 +70,14 @@ public class Ollama {
*/
@Setter private long requestTimeoutSeconds = 10;

/** The read timeout in seconds for image URLs. */
/**
* The read timeout in seconds for image URLs.
*/
@Setter private int imageURLReadTimeoutSeconds = 10;

/** The connect timeout in seconds for image URLs. */
/**
* The connect timeout in seconds for image URLs.
*/
@Setter private int imageURLConnectTimeoutSeconds = 10;

/**
@@ -280,9 +284,9 @@ public class Ollama {
/**
* Handles retry backoff for pullModel.
*
* @param modelName the name of the model being pulled
* @param currentRetry the current retry attempt (zero-based)
* @param maxRetries the maximum number of retries allowed
* @param modelName the name of the model being pulled
* @param currentRetry the current retry attempt (zero-based)
* @param maxRetries the maximum number of retries allowed
* @param baseDelayMillis the base delay in milliseconds for exponential backoff
* @throws InterruptedException if the thread is interrupted during sleep
*/
@@ -376,7 +380,7 @@ public class Ollama {
* Returns true if the response indicates a successful pull.
*
* @param modelPullResponse the response from the model pull
* @param modelName the name of the model
* @param modelName the name of the model
* @return true if the pull was successful, false otherwise
* @throws OllamaException if the response contains an error
*/
@@ -601,7 +605,7 @@ public class Ollama {
/**
* Deletes a model from the Ollama server.
*
* @param modelName the name of the model to be deleted
* @param modelName the name of the model to be deleted
* @param ignoreIfNotPresent ignore errors if the specified model is not present on the Ollama server
* @throws OllamaException if the response indicates an error status
*/
@@ -758,7 +762,7 @@ public class Ollama {
* Generates a response from a model using the specified parameters and stream observer.
* If {@code streamObserver} is provided, streaming is enabled; otherwise, a synchronous call is made.
*
* @param request the generation request
* @param request the generation request
* @param streamObserver the stream observer for streaming responses, or null for synchronous
* @return the result of the generation
* @throws OllamaException if the request fails
@@ -801,6 +805,7 @@ public class Ollama {
chatRequest.setMessages(msgs);
msgs.add(ocm);
OllamaChatTokenHandler hdlr = null;
chatRequest.setUseTools(true);
chatRequest.setTools(request.getTools());
if (streamObserver != null) {
chatRequest.setStream(true);
@@ -823,10 +828,10 @@ public class Ollama {
/**
* Generates a response from a model asynchronously, returning a streamer for results.
*
* @param model the model name
* @param model the model name
* @param prompt the prompt to send
* @param raw whether to use raw mode
* @param think whether to use "think" mode
* @param raw whether to use raw mode
* @param think whether to use "think" mode
* @return an OllamaAsyncResultStreamer for streaming results
* @throws OllamaException if the request fails
*/
@@ -857,13 +862,13 @@ public class Ollama {

/**
* Sends a chat request to a model using an {@link OllamaChatRequest} and sets up streaming response.
* This can be constructed using an {@link OllamaChatRequestBuilder}.
* This can be constructed using an {@link OllamaChatRequest#builder()}.
*
* <p>Note: the OllamaChatRequestModel#getStream() property is not implemented.
*
* @param request request object to be sent to the server
* @param request request object to be sent to the server
* @param tokenHandler callback handler to handle the last token from stream (caution: the
* previous tokens from stream will not be concatenated)
* previous tokens from stream will not be concatenated)
* @return {@link OllamaChatResult}
* @throws OllamaException if the response indicates an error status
*/
@@ -877,7 +882,7 @@ public class Ollama {
// only add tools if tools flag is set
if (request.isUseTools()) {
// add all registered tools to request
request.setTools(toolRegistry.getRegisteredTools());
request.getTools().addAll(toolRegistry.getRegisteredTools());
}

if (tokenHandler != null) {
@@ -958,12 +963,16 @@ public class Ollama {
* Registers multiple tools in the tool registry.
*
* @param tools a list of {@link Tools.Tool} objects to register. Each tool contains its
* specification and function.
* specification and function.
*/
public void registerTools(List<Tools.Tool> tools) {
toolRegistry.addTools(tools);
}

public List<Tools.Tool> getRegisteredTools() {
return toolRegistry.getRegisteredTools();
}

/**
* Deregisters all tools from the tool registry. This method removes all registered tools,
* effectively clearing the registry.
@@ -979,7 +988,7 @@ public class Ollama {
* and recursively registers annotated tools from all the providers specified in the annotation.
*
* @throws OllamaException if the caller's class is not annotated with {@link
* OllamaToolService} or if reflection-based instantiation or invocation fails
* OllamaToolService} or if reflection-based instantiation or invocation fails
*/
public void registerAnnotatedTools() throws OllamaException {
try {
@@ -1127,7 +1136,7 @@ public class Ollama {
* This method synchronously calls the Ollama API. If a stream handler is provided,
* the request will be streamed; otherwise, a regular synchronous request will be made.
*
* @param ollamaRequestModel the request model containing necessary parameters for the Ollama API request
* @param ollamaRequestModel the request model containing necessary parameters for the Ollama API request
* @param thinkingStreamHandler the stream handler for "thinking" tokens, or null if not used
* @param responseStreamHandler the stream handler to process streaming responses, or null for non-streaming requests
* @return the result of the Ollama API request

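The `pullModel` retry javadoc in the Ollama.java hunks documents a zero-based `currentRetry`, a `maxRetries` cap and a `baseDelayMillis` used for exponential backoff, but the hunks do not show the method body. The sketch below assumes the common doubling scheme (delay = `baseDelayMillis * 2^currentRetry`) and skips the wait after the last allowed attempt; `Backoff`, `delayMillis` and `sleepBeforeRetry` are hypothetical names, and ollama4j's actual implementation may differ.

```java
/**
 * Hypothetical sketch of zero-based exponential backoff; not ollama4j's implementation.
 */
final class Backoff {

    private Backoff() {}

    /** Delay before the next attempt: baseDelayMillis * 2^currentRetry. */
    static long delayMillis(int currentRetry, long baseDelayMillis) {
        if (currentRetry < 0 || baseDelayMillis < 0) {
            throw new IllegalArgumentException("currentRetry and baseDelayMillis must be >= 0");
        }
        // Cap the exponent at 30 so the shift stays small; a huge base delay can still overflow.
        return baseDelayMillis * (1L << Math.min(currentRetry, 30));
    }

    /** Sleeps before the next attempt, or returns at once if no retries remain. */
    static void sleepBeforeRetry(
            String modelName, int currentRetry, int maxRetries, long baseDelayMillis)
            throws InterruptedException {
        if (currentRetry + 1 >= maxRetries) {
            return; // this was the last allowed attempt, so waiting would be pointless
        }
        long delay = delayMillis(currentRetry, baseDelayMillis);
        System.out.println("Retrying pull of " + modelName + " in " + delay + " ms");
        Thread.sleep(delay);
    }

    public static void main(String[] args) throws InterruptedException {
        for (int retry = 0; retry < 4; retry++) {
            System.out.println("retry " + retry + " waits " + delayMillis(retry, 100) + " ms");
        }
        sleepBeforeRetry("example-model", 0, 3, 50); // hypothetical model name; sleeps 50 ms
    }
}
```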

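The `@@ -877,7 +882,7 @@` hunk changes how registered tools reach a chat request: the old `request.setTools(...)` replaced any tools already on the request, while the new `request.getTools().addAll(...)` keeps them and appends the registry's tools. The sketch below contrasts the two behaviours with plain strings standing in for `Tools.Tool` objects; `ToolMerge`, `replace` and `merge` are hypothetical names. One caveat worth checking against the library: calling `addAll` on the request's own list presumably requires `getTools()` to return a non-null, mutable list (an immutable list such as `List.of(...)` throws `UnsupportedOperationException`), so the sketch merges into a defensive copy instead.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical illustration of replacing versus appending a request's tool list.
 * Strings stand in for Tools.Tool objects.
 */
final class ToolMerge {

    private ToolMerge() {}

    /** Old behaviour: the registry's tools replace whatever the request carried. */
    static List<String> replace(List<String> requestTools, List<String> registered) {
        return new ArrayList<>(registered); // requestTools is intentionally ignored
    }

    /** New behaviour: registry tools are appended; a null request list counts as empty. */
    static List<String> merge(List<String> requestTools, List<String> registered) {
        List<String> merged =
                requestTools == null ? new ArrayList<>() : new ArrayList<>(requestTools);
        merged.addAll(registered);
        return merged;
    }

    public static void main(String[] args) {
        List<String> perRequest = List.of("get-weather");
        List<String> registry = List.of("run-sql", "send-email");
        System.out.println("replace: " + replace(perRequest, registry));
        System.out.println("merge:   " + merge(perRequest, registry));
    }
}
```

Copying also means that reusing one request object for several calls does not keep appending the registry's tools to it, which a direct `addAll` on a shared mutable list would do.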
290

src/main/java/io/github/ollama4j/agent/Agent.java

Normal file

@@ -0,0 +1,290 @@
/*
 * Ollama4j - Java library for interacting with Ollama server.
 * Copyright (c) 2025 Amith Koujalgi and contributors.
 *
 * Licensed under the MIT License (the "License");
 * you may not use this file except in compliance with the License.
 *
 */
package io.github.ollama4j.agent;

import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.dataformat.yaml.YAMLFactory;
import io.github.ollama4j.Ollama;
import io.github.ollama4j.exceptions.OllamaException;
import io.github.ollama4j.impl.ConsoleOutputGenerateTokenHandler;
import io.github.ollama4j.models.chat.*;
import io.github.ollama4j.tools.ToolFunction;
import io.github.ollama4j.tools.Tools;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;
import java.util.Scanner;
import lombok.*;

/**
 * The {@code Agent} class represents an AI assistant capable of interacting with the Ollama API
 * server.
 *
 * <p>It supports the use of tools (interchangeable code components), persistent chat history, and
 * interactive as well as pre-scripted chat sessions.
 *
 * <h2>Usage</h2>
 *
 * <ul>
 *   <li>Instantiate an Agent via {@link #load(String)} for YAML-based configuration.
 *   <li>Handle conversation turns via {@link #interact(String, OllamaChatStreamObserver)}.
 *   <li>Use {@link #runInteractive()} for an interactive console-based session.
 * </ul>
 */
public class Agent {
    /** The agent's display name */
    private final String name;

    /** List of supported tools for this agent */
    private final List<Tools.Tool> tools;

    /** Ollama client instance for communication with the API */
    private final Ollama ollamaClient;

    /** The model name used for chat completions */
    private final String model;

    /** Persists chat message history across rounds */
    private final List<OllamaChatMessage> chatHistory;

    /** Optional custom system prompt for the agent */
    private final String customPrompt;

    /**
     * Constructs a new Agent.
     *
     * @param name The agent's given name.
     * @param ollamaClient The Ollama API client instance to use.
     * @param model The model name to use for chat completion.
     * @param customPrompt A custom prompt to prepend to all conversations (may be null).
     * @param tools List of available tools for function calling.
     */
    public Agent(
            String name,
            Ollama ollamaClient,
            String model,
            String customPrompt,
            List<Tools.Tool> tools) {
        this.name = name;
        this.ollamaClient = ollamaClient;
        this.chatHistory = new ArrayList<>();
        this.tools = tools;
        this.model = model;
        this.customPrompt = customPrompt;
    }

    /**
     * Loads and constructs an Agent from a YAML configuration file (classpath or filesystem).
     *
     * <p>The YAML should define the agent, the model, and the desired tool functions (using their
     * fully qualified class names for auto-discovery).
     *
     * @param yamlPathOrResource Path or classpath resource name of the YAML file.
     * @return New Agent instance loaded according to the YAML definition.
     * @throws RuntimeException if the YAML cannot be read or agent cannot be constructed.
     */
    public static Agent load(String yamlPathOrResource) {
        try {
            ObjectMapper mapper = new ObjectMapper(new YAMLFactory());

            InputStream input =
                    Agent.class.getClassLoader().getResourceAsStream(yamlPathOrResource);
            if (input == null) {
                java.nio.file.Path filePath = java.nio.file.Paths.get(yamlPathOrResource);
                if (java.nio.file.Files.exists(filePath)) {
                    input = java.nio.file.Files.newInputStream(filePath);
                } else {
                    throw new RuntimeException(
                            yamlPathOrResource + " not found in classpath or file system");
                }
            }
            AgentSpec agentSpec = mapper.readValue(input, AgentSpec.class);
            List<AgentToolSpec> tools = agentSpec.getTools();
            for (AgentToolSpec tool : tools) {
                String fqcn = tool.getToolFunctionFQCN();
                if (fqcn != null && !fqcn.isEmpty()) {
                    try {
                        Class<?> clazz = Class.forName(fqcn);
                        Object instance = clazz.getDeclaredConstructor().newInstance();
                        if (instance instanceof ToolFunction) {
                            tool.setToolFunctionInstance((ToolFunction) instance);
                        } else {
                            throw new RuntimeException(
                                    "Class does not implement ToolFunction: " + fqcn);
                        }
                    } catch (Exception e) {
                        throw new RuntimeException(
                                "Failed to instantiate tool function: " + fqcn, e);
                    }
                }
            }
            List<Tools.Tool> agentTools = new ArrayList<>();
            for (AgentToolSpec a : tools) {
                Tools.Tool t = new Tools.Tool();
                t.setToolFunction(a.getToolFunctionInstance());
                Tools.ToolSpec ts = new Tools.ToolSpec();
                ts.setName(a.getName());
                ts.setDescription(a.getDescription());
                ts.setParameters(a.getParameters());
                t.setToolSpec(ts);
                agentTools.add(t);
            }
            Ollama ollama = new Ollama(agentSpec.getHost());
            ollama.setRequestTimeoutSeconds(120);
            ollama.pullModel(agentSpec.getModel());
            return new Agent(
                    agentSpec.getName(),
                    ollama,
                    agentSpec.getModel(),
                    agentSpec.getCustomPrompt(),
                    agentTools);
        } catch (Exception e) {
            throw new RuntimeException("Failed to load agent from YAML", e);
        }
    }

    /**
     * Conducts a conversational interaction with the agent.
     *
     * @param userInput the user's question, instruction, or message for the agent.
     * @param chatTokenHandler an optional handler for receiving streaming token updates from the model as it generates a reply.
     *     Can be {@code null} if streaming output is not needed.
     * @return Updated chat history, as a list of {@link OllamaChatMessage} objects representing the complete conversation so far.
     *     This includes system, user, assistant, and any tool/function calls/results.
     * @throws OllamaException if an error occurs communicating with the Ollama API or running tools.
     */
    public List<OllamaChatMessage> interact(
            String userInput, OllamaChatStreamObserver chatTokenHandler) throws OllamaException {
        // Build a concise and readable description of available tools
        String availableToolsDescription =
                tools.isEmpty()
                        ? ""
                        : tools.stream()
                                .map(
                                        t ->
                                                String.format(
                                                        "- %s: %s",
                                                        t.getToolSpec().getName(),
                                                        t.getToolSpec().getDescription() != null
                                                                ? t.getToolSpec().getDescription()
                                                                : "No description"))
                                .reduce((a, b) -> a + "\n" + b)
                                .map(desc -> "\nYou have access to the following tools:\n" + desc)
                                .orElse("");

        // Add system prompt if chatHistory is empty
        if (chatHistory.isEmpty()) {
            String systemPrompt =
                    String.format(
                            "You are a helpful AI assistant named %s. Your actions are limited to"
                                    + " using the available tools. %s%s",
                            name,
                            (customPrompt != null ? customPrompt : ""),
                            availableToolsDescription);
            chatHistory.add(new OllamaChatMessage(OllamaChatMessageRole.SYSTEM, systemPrompt));
        }

        // Add the user input as a message before sending request
        chatHistory.add(new OllamaChatMessage(OllamaChatMessageRole.USER, userInput));

        OllamaChatRequest request =
                OllamaChatRequest.builder()
                        .withTools(tools)
                        .withUseTools(true)
                        .withModel(model)
                        .withMessages(chatHistory)
                        .build();
        OllamaChatResult response = ollamaClient.chat(request, chatTokenHandler);

        chatHistory.clear();
        chatHistory.addAll(response.getChatHistory());

        return response.getChatHistory();
    }

    /**
     * Launches an endless interactive console session with the agent, echoing user input and the
     * agent's response using the provided chat model and tools.
     *
     * <p>Type {@code exit} to break the loop and terminate the session.
     *
     * @throws OllamaException if any errors occur talking to the Ollama API.
     */
    public void runInteractive() throws OllamaException {
        Scanner sc = new Scanner(System.in);
        while (true) {
            System.out.print("\n[You]: ");
            String input = sc.nextLine();
            if ("exit".equalsIgnoreCase(input)) break;
            this.interact(
                    input,
                    new OllamaChatStreamObserver(
                            new ConsoleOutputGenerateTokenHandler(),
                            new ConsoleOutputGenerateTokenHandler()));
        }
    }

    /**
     * Bean describing an agent as definable from YAML.
     *
     * <ul>
     *   <li>{@code name}: Agent display name
     *   <li>{@code description}: Freeform description
     *   <li>{@code tools}: List of tools/functions to enable
     *   <li>{@code host}: Target Ollama host address
     *   <li>{@code model}: Name of Ollama model to use
     *   <li>{@code customPrompt}: Agent's custom base prompt
     *   <li>{@code requestTimeoutSeconds}: Timeout for requests
     * </ul>
     */
    @Data
    public static class AgentSpec {
        private String name;
        private String description;
        private List<AgentToolSpec> tools;
        private String host;
        private String model;
        private String customPrompt;
        private int requestTimeoutSeconds;
    }

    /**
     * Subclass extension of {@link Tools.ToolSpec}, which allows associating a tool with a function
     * implementation (via FQCN).
     */
    @Data
    @Setter
    @Getter
    @EqualsAndHashCode(callSuper = false)
    private static class AgentToolSpec extends Tools.ToolSpec {
        /** Fully qualified class name of the tool's {@link ToolFunction} implementation */
        private String toolFunctionFQCN = null;

        /** Instance of the {@link ToolFunction} to invoke */
        private ToolFunction toolFunctionInstance = null;
    }

    /** Bean for describing a tool function parameter for use in agent YAML definitions. */
    @Data
    public class AgentToolParameter {
        /** The parameter's type (e.g., string, number, etc.) */
        private String type;

        /** Description of the parameter */
        private String description;

        /** Whether this parameter is required */
        private boolean required;

        /**
         * Enum values (if any) that this parameter may take; _enum used because 'enum' is reserved
         */
        private List<String> _enum; // `enum` is a reserved keyword, so use _enum or similar
    }
}

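`Agent.load` first asks the class loader for `yamlPathOrResource` and only falls back to the file system when no classpath resource matches. A standalone version of that lookup order is sketched below; `ResourceResolver.open` is a hypothetical helper that throws a checked `IOException` where `Agent.load` throws `RuntimeException`, and the demo reads back a temporary file it creates itself.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/**
 * Hypothetical helper mirroring the lookup order in Agent.load:
 * classpath resource first, then a file-system path.
 */
final class ResourceResolver {

    private ResourceResolver() {}

    /** Opens the named resource; the caller is responsible for closing the stream. */
    static InputStream open(String name) throws IOException {
        InputStream in = ResourceResolver.class.getClassLoader().getResourceAsStream(name);
        if (in != null) {
            return in; // found on the classpath
        }
        Path path = Paths.get(name);
        if (Files.exists(path)) {
            return Files.newInputStream(path); // fall back to the file system
        }
        throw new IOException(name + " not found in classpath or file system");
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("agent", ".yaml");
        try {
            Files.writeString(tmp, "name: demo\n");
            try (InputStream in = open(tmp.toString())) {
                System.out.print(new String(in.readAllBytes(), StandardCharsets.UTF_8));
            }
        } finally {
            Files.deleteIfExists(tmp);
        }
    }
}
```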

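Tool functions named in the agent YAML are created reflectively: `Class.forName` resolves the fully qualified class name, the no-arg constructor is invoked, and the instance is checked against `ToolFunction`. The sketch below reproduces that step with a hypothetical `ToolFn` interface and `EchoTool` class standing in for ollama4j's `ToolFunction` and a user-supplied tool. One design difference: `Agent.load` catches `Exception`, so its own "does not implement" error is rewrapped as the cause of a "Failed to instantiate" error; the sketch catches only `ReflectiveOperationException` so that message surfaces directly.

```java
import java.util.Map;

/**
 * Hypothetical sketch of the reflective step in Agent.load: resolve a class by its
 * fully qualified name, call its no-arg constructor, and check the interface.
 */
final class ToolLoader {

    private ToolLoader() {}

    static ToolFn instantiate(String fqcn) {
        try {
            Class<?> clazz = Class.forName(fqcn);
            Object instance = clazz.getDeclaredConstructor().newInstance();
            if (instance instanceof ToolFn) {
                return (ToolFn) instance;
            }
            throw new IllegalArgumentException("Class does not implement ToolFn: " + fqcn);
        } catch (ReflectiveOperationException e) {
            // ClassNotFoundException, NoSuchMethodException, InstantiationException, ...
            throw new IllegalArgumentException("Failed to instantiate tool function: " + fqcn, e);
        }
    }

    public static void main(String[] args) {
        ToolFn echo = instantiate(EchoTool.class.getName());
        System.out.println(echo.apply(Map.of("text", "hello")));
    }
}

/** Hypothetical stand-in for ollama4j's ToolFunction interface. */
interface ToolFn {
    Object apply(Map<String, Object> arguments);
}

/** Example tool; reflection needs an accessible no-arg constructor. */
class EchoTool implements ToolFn {
    public EchoTool() {}

    @Override
    public Object apply(Map<String, Object> arguments) {
        return arguments.get("text");
    }
}
```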

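On the first turn, `interact` builds a system prompt from the agent name, the optional custom prompt and a bulleted list of tool names and descriptions. The sketch below rebuilds those strings outside the library so their exact shape is visible; `PromptBuilder` and its nested `ToolInfo` are hypothetical. It uses `Collectors.joining("\n")`, which yields the same text as the diff's `reduce((a, b) -> a + "\n" + b)` for a non-empty list, with the empty case handled by the `isEmpty()` check as in `Agent`.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical rebuild of the system-prompt text assembled in Agent.interact. */
final class PromptBuilder {

    private PromptBuilder() {}

    /** Minimal stand-in for a tool's name and (possibly null) description. */
    static final class ToolInfo {
        final String name;
        final String description;

        ToolInfo(String name, String description) {
            this.name = name;
            this.description = description;
        }
    }

    /** "- name: description" lines under a header, or "" when there are no tools. */
    static String toolsSection(List<ToolInfo> tools) {
        if (tools.isEmpty()) {
            return "";
        }
        return "\nYou have access to the following tools:\n"
                + tools.stream()
                        .map(t -> String.format("- %s: %s", t.name,
                                t.description != null ? t.description : "No description"))
                        .collect(Collectors.joining("\n"));
    }

    static String systemPrompt(String agentName, String customPrompt, List<ToolInfo> tools) {
        return String.format(
                "You are a helpful AI assistant named %s. Your actions are limited to"
                        + " using the available tools. %s%s",
                agentName, customPrompt != null ? customPrompt : "", toolsSection(tools));
    }

    public static void main(String[] args) {
        List<ToolInfo> tools = List.of(
                new ToolInfo("get-weather", "Looks up weather"), new ToolInfo("run-sql", null));
        System.out.println(systemPrompt("Ada", "Be concise.", tools));
    }
}
```

When a custom prompt is present, the tools section follows it directly, so the leading `\n` of that section is the only separator between them.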
@@ -11,6 +11,9 @@ package io.github.ollama4j.models.chat;
import io.github.ollama4j.models.request.OllamaCommonRequest;
import io.github.ollama4j.tools.Tools;
import io.github.ollama4j.utils.OllamaRequestBody;
import io.github.ollama4j.utils.Options;
import java.io.File;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import lombok.Getter;
@@ -20,8 +23,8 @@ import lombok.Setter;
* Defines a Request to use against the ollama /api/chat endpoint.
*
* @see <a href=
* "https://github.com/ollama/ollama/blob/main/docs/api.md#generate-a-chat-completion">Generate
* Chat Completion</a>