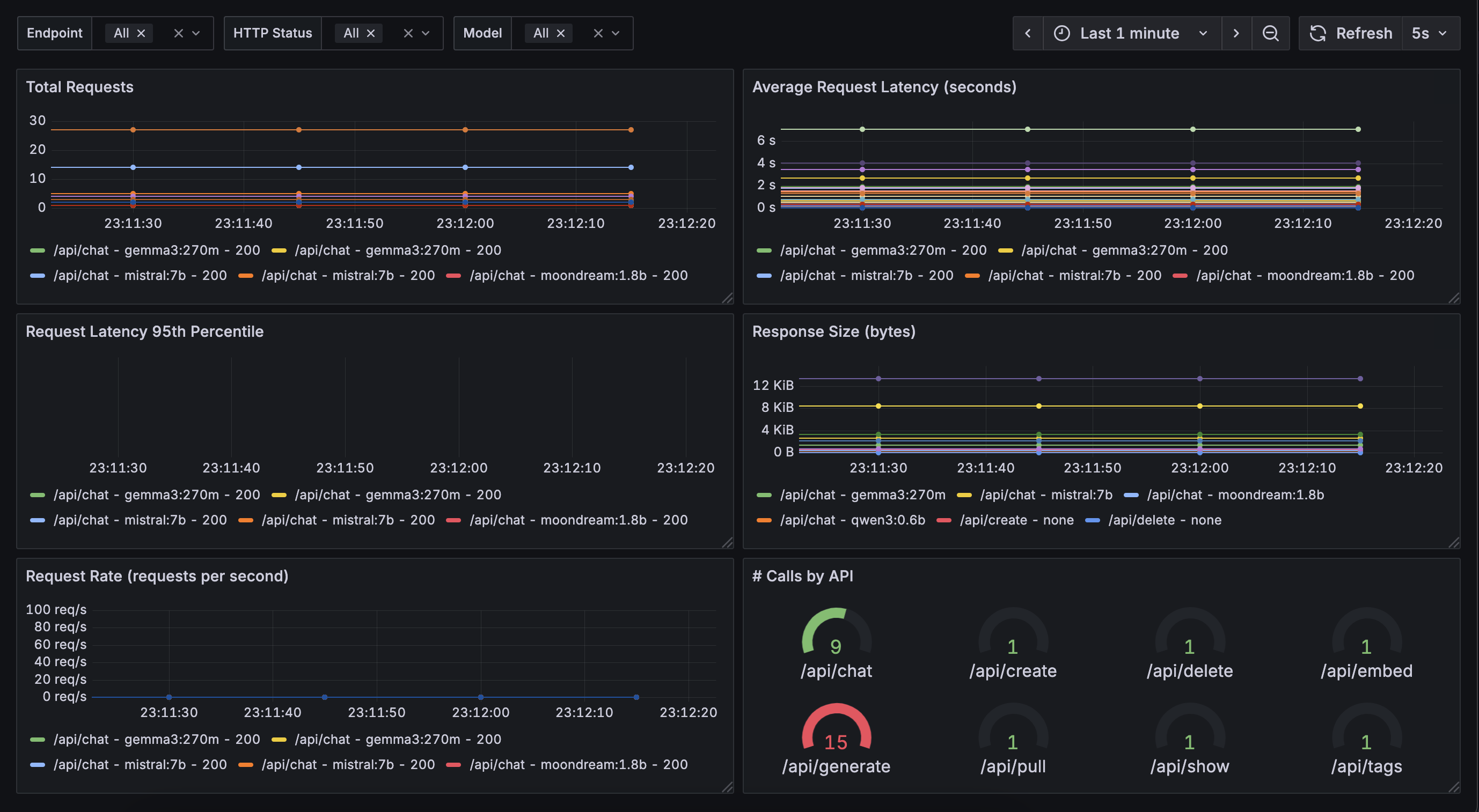

Ollama4j

Table of Contents

- Capabilities

- How does it work?

- Requirements

- Usage

- API Spec

- Examples

- Development

- Get Involved

- Who's using Ollama4j?

- Growth

Capabilities

- Text generation: Single-turn `generate` with optional streaming and advanced options

- Chat: Multi-turn chat with conversation history and roles

- Tool/function calling: Built-in tool invocation via annotations and tool specs

- Reasoning/thinking modes: Generate and chat with “thinking” outputs where supported

- Image inputs (multimodal): Generate with images as inputs where models support vision

- Embeddings: Create vector embeddings for text

- Async generation: Fire-and-forget style generation APIs

- Custom roles: Define and use custom chat roles

- Model management: List, pull, create, delete, and get model details

- Connectivity utilities: Server `ping` and process status (`ps`)

- Authentication: Basic auth and bearer token support

- Options builder: Type-safe builder for model parameters and request options

- Timeouts: Configure connect/read/write timeouts

- Logging: Built-in logging hooks for requests and responses

- Metrics & Monitoring 🆕: Built-in Prometheus metrics export for real-time monitoring of requests, model usage, and performance. (Beta feature – feedback/contributions welcome!) Check out the ollama4j-examples repository for details.
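To make the authentication and timeout items above more concrete, here is a minimal sketch of what they mean at the HTTP level. It uses only the JDK's `java.net.http` client and is not Ollama4j's API: the class name `AuthAndTimeoutSketch`, its methods, and the sample credentials are hypothetical. The only Ollama-specific assumptions are the default server address `http://localhost:11434` and the `/api/tags` model-listing endpoint.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.Base64;

// Illustrative only: plain JDK code, not Ollama4j's API.
class AuthAndTimeoutSketch {

    // Builds the value of an HTTP Basic "Authorization" header:
    // "Basic " followed by base64("username:password").
    static String basicAuth(String username, String password) {
        String credentials = username + ":" + password;
        String encoded = Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
        return "Basic " + encoded;
    }

    // Builds the value of a bearer-token "Authorization" header.
    static String bearerAuth(String token) {
        return "Bearer " + token;
    }

    // Builds (but does not send) a GET request carrying an auth header
    // and a per-request timeout.
    static HttpRequest authorizedGet(String url, String authorization, Duration timeout) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", authorization)
                .timeout(timeout)
                .GET()
                .build();
    }

    public static void main(String[] args) {
        // The connect timeout is configured on the client;
        // the request timeout is configured per request.
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();

        HttpRequest request = authorizedGet(
                "http://localhost:11434/api/tags", // assumed default Ollama address
                basicAuth("user", "pass"),         // placeholder credentials
                Duration.ofSeconds(30));

        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().firstValue("Authorization").orElse(""));
        System.out.println(client.connectTimeout().orElseThrow());
    }
}
```

In practice you would let the library set these headers and timeouts for you; consult the API specifications for the actual configuration methods.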

How does it work?

flowchart LR

o4j[Ollama4j]

o[Ollama Server]

o4j -->|Communicates with| o;

m[Models]

subgraph Ollama Deployment

direction TB

o -->|Manages| m

end
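As a rough illustration of the "Communicates with" arrow in the diagram, the sketch below builds the kind of HTTP request a client could send to an Ollama server's `/api/generate` endpoint. It is plain JDK code, not Ollama4j's implementation: the class `GenerateRequestSketch` and its methods are hypothetical, `llama3` is a placeholder model name, and `http://localhost:11434` is assumed to be the server address. The request is only constructed, never sent, so no running server is needed.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative only: shows the shape of a generate request, not Ollama4j's internals.
class GenerateRequestSketch {

    // Minimal JSON string escaping: backslash, double quote, and control characters.
    static String escapeJson(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '\\': sb.append("\\\\"); break;
                case '"':  sb.append("\\\""); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Other control characters become \u00XX escapes.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Builds a non-streaming generate payload with "model", "prompt", and "stream" fields.
    static String generateBody(String model, String prompt) {
        return "{\"model\":\"" + escapeJson(model)
                + "\",\"prompt\":\"" + escapeJson(prompt)
                + "\",\"stream\":false}";
    }

    // Builds (but does not send) the POST request for the generate endpoint.
    static HttpRequest generateRequest(String host, String model, String prompt) {
        return HttpRequest.newBuilder(URI.create(host + "/api/generate"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(generateBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) {
        String host = "http://localhost:11434"; // assumed server address
        HttpRequest request = generateRequest(host, "llama3", "Why is the sky blue?");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(generateBody("llama3", "Why is the sky blue?"));
    }
}
```

A client library wraps this plumbing (serialization, headers, response parsing) behind typed methods, which is the role Ollama4j plays in the diagram.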

Requirements

Usage

Note

We are now publishing the artifacts to both Maven Central and GitHub package repositories.

Track the releases here and update the dependency version according to your requirements.

For Maven

Using Maven Central

In your Maven project, add this dependency:

<dependency>

<groupId>io.github.ollama4j</groupId>

<artifactId>ollama4j</artifactId>

<version>1.1.0</version>

</dependency>

Using GitHub's Maven Package Repository

- Add the `GitHub Maven Packages` repository to your project's `pom.xml` or your `settings.xml`:

<repositories>

<repository>

<id>github</id>

<name>GitHub Apache Maven Packages</name>

<url>https://maven.pkg.github.com/ollama4j/ollama4j</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

</repositories>

- Add the `GitHub` server to `settings.xml` (usually found at `~/.m2/settings.xml`):

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0

http://maven.apache.org/xsd/settings-1.0.0.xsd">

<servers>

<server>

<id>github</id>

<username>YOUR-USERNAME</username>

<password>YOUR-TOKEN</password>

</server>

</servers>

</settings>

- In your Maven project, add this dependency:

<dependency>

<groupId>io.github.ollama4j</groupId>

<artifactId>ollama4j</artifactId>

<version>1.1.0</version>

</dependency>

For Gradle

- Add the dependency

dependencies {

implementation 'io.github.ollama4j:ollama4j:1.1.0'

}

API Spec

Tip

Find the full API specifications on the website.

Examples

For practical examples and usage patterns of the Ollama4j library, check out the ollama4j-examples repository.

Development

Make sure you have pre-commit installed.

With brew:

brew install pre-commit

With pip:

pip install pre-commit

Setup dev environment

Note

If you're on Windows, install Chocolatey Package Manager for Windows and then install `make` by running `choco install make`. Tip: if the installation fails, run the command with administrator privileges.

make dev

Build

make build

Run unit tests

make unit-tests

Run integration tests

Make sure Docker is running; the integration tests use Testcontainers to run against an Ollama Docker container.

make integration-tests

Releases

New artifacts are published by the GitHub Actions CI workflow when a new release is created from the `main` branch.

Get Involved

Contributions are most welcome! Whether it's reporting a bug, proposing an enhancement, or helping with code - any sort of contribution is much appreciated.

Who's using Ollama4j?

| # | Project Name | Description | Link |
|---|---|---|---|
| 1 | Datafaker | A library to generate fake data | GitHub |
| 2 | Vaadin Web UI | UI-Tester for interactions with Ollama via ollama4j | GitHub |
| 3 | ollama-translator | A Minecraft 1.20.6 Spigot plugin that translates all messages into a specific target language via Ollama | GitHub |
| 4 | AI Player | A Minecraft mod that adds an intelligent "second player" to the game | Website, GitHub, Reddit Thread |
| 5 | Ollama4j Web UI | A web UI for Ollama written in Java using Spring Boot, Vaadin, and Ollama4j | GitHub |
| 6 | JnsCLI | A command-line tool for Jenkins that manages jobs, builds, and configurations, with AI-powered error analysis | GitHub |
| 7 | Katie Backend | An open-source AI-based question-answering platform for accessing private domain knowledge | GitHub |
| 8 | TeleLlama3 Bot | A question-answering Telegram bot | Repo |
| 9 | moqui-wechat | A moqui-wechat component | GitHub |
| 10 | B4X | A set of simple and powerful RAD tools for desktop and server development | Website |
| 11 | Research Article | Article: Large language model based mutations in genetic improvement, published in the National Library of Medicine (National Center for Biotechnology Information) | Website |
| 12 | renaime | A LLaVa-powered tool that automatically renames image files with messy file names | Website |

Growth

References

Credits

The nomenclature and the icon have been adopted from the incredible Ollama project.

Thanks to the amazing contributors