mirror of https://github.com/amithkoujalgi/ollama4j.git, synced 2025-10-14 17:38:58 +02:00

Compare commits

No commits in common. "main" and "1.1.0" have entirely different histories.

10

.github/CODEOWNERS

vendored

@@ -1,10 +0,0 @@
-# See https://docs.github.com/repositories/managing-your-repositorys-settings-and-features/customizing-your-repository/about-code-owners
-
-# Default owners for everything in the repo
-* @amithkoujalgi
-
-# Example for scoping ownership (uncomment and adjust as teams evolve)
-# /docs/ @amithkoujalgi
-# /src/ @amithkoujalgi
-
-

59

.github/ISSUE_TEMPLATE/bug_report.yml

vendored

@@ -1,59 +0,0 @@
-name: Bug report
-description: File a bug report
-labels: [bug]
-assignees: []
-body:
-  - type: markdown
-    attributes:
-      value: |
-        Thanks for taking the time to fill out this bug report!
-  - type: input
-    id: version
-    attributes:
-      label: ollama4j version
-      description: e.g., 1.1.0
-      placeholder: 1.1.0
-    validations:
-      required: true
-  - type: input
-    id: java
-    attributes:
-      label: Java version
-      description: Output of `java -version`
-      placeholder: 11/17/21
-    validations:
-      required: true
-  - type: input
-    id: environment
-    attributes:
-      label: Environment
-      description: OS, build tool, Docker/Testcontainers, etc.
-      placeholder: macOS 13, Maven 3.9.x, Docker 24.x
-  - type: textarea
-    id: what-happened
-    attributes:
-      label: What happened?
-      description: Also tell us what you expected to happen
-    validations:
-      required: true
-  - type: textarea
-    id: steps
-    attributes:
-      label: Steps to reproduce
-      description: Be as specific as possible
-      placeholder: |
-        1. Setup ...
-        2. Run ...
-        3. Observe ...
-    validations:
-      required: true
-  - type: textarea
-    id: logs
-    attributes:
-      label: Relevant logs/stack traces
-      render: shell
-  - type: textarea
-    id: additional
-    attributes:
-      label: Additional context
-

6

.github/ISSUE_TEMPLATE/config.yml

vendored

@@ -1,6 +0,0 @@
-blank_issues_enabled: false
-contact_links:
-  - name: Questions / Discussions
-    url: https://github.com/ollama4j/ollama4j/discussions
-    about: Ask questions and discuss ideas here
-

31

.github/ISSUE_TEMPLATE/feature_request.yml

vendored

@@ -1,31 +0,0 @@
-name: Feature request
-description: Suggest an idea or enhancement
-labels: [enhancement]
-assignees: []
-body:
-  - type: markdown
-    attributes:
-      value: |
-        Thanks for suggesting an improvement!
-  - type: textarea
-    id: problem
-    attributes:
-      label: Is your feature request related to a problem?
-      description: A clear and concise description of the problem
-      placeholder: I'm frustrated when...
-  - type: textarea
-    id: solution
-    attributes:
-      label: Describe the solution you'd like
-      placeholder: I'd like...
-    validations:
-      required: true
-  - type: textarea
-    id: alternatives
-    attributes:
-      label: Describe alternatives you've considered
-  - type: textarea
-    id: context
-    attributes:
-      label: Additional context
-

34

.github/PULL_REQUEST_TEMPLATE.md

vendored

@@ -1,34 +0,0 @@
-## Description
-
-Describe what this PR does and why.
-
-## Type of change
-
-- [ ] feat: New feature
-- [ ] fix: Bug fix
-- [ ] docs: Documentation update
-- [ ] refactor: Refactoring
-- [ ] test: Tests only
-- [ ] build/ci: Build or CI changes
-
-## How has this been tested?
-
-Explain the testing done. Include commands, screenshots, logs.
-
-## Checklist
-
-- [ ] I ran `pre-commit run -a` locally
-- [ ] `make build` succeeds locally
-- [ ] Unit/integration tests added or updated as needed
-- [ ] Docs updated (README/docs site) if user-facing changes
-- [ ] PR title follows Conventional Commits
-
-## Breaking changes
-
-List any breaking changes and migration notes.
-
-## Related issues
-
-Fixes #
-
-

34

.github/dependabot.yml

vendored

@@ -1,34 +0,0 @@
-# To get started with Dependabot version updates, you'll need to specify which
-## package ecosystems to update and where the package manifests are located.
-## Please see the documentation for all configuration options:
-## https://docs.github.com/code-security/dependabot/dependabot-version-updates/configuration-options-for-the-dependabot.yml-file
-#
-#version: 2
-#updates:
-# - package-ecosystem: "" # See documentation for possible values
-# directory: "/" # Location of package manifests
-# schedule:
-# interval: "weekly"
-
-
-version: 2
-updates:
-  - package-ecosystem: "maven"
-    directory: "/"
-    schedule:
-      interval: "weekly"
-    open-pull-requests-limit: 5
-    labels: ["dependencies"]
-  - package-ecosystem: "github-actions"
-    directory: "/"
-    schedule:
-      interval: "weekly"
-    open-pull-requests-limit: 5
-    labels: ["dependencies"]
-  - package-ecosystem: "npm"
-    directory: "/docs"
-    schedule:
-      interval: "weekly"
-    open-pull-requests-limit: 5
-    labels: ["dependencies"]
-#

18

.github/workflows/build-on-pull-request.yml

vendored

@@ -20,17 +20,13 @@ jobs:
     permissions:
       contents: read

-    environment:
-      name: github-pages
-      url: ${{ steps.deployment.outputs.page_url }}
-
     steps:
-      - uses: actions/checkout@v5
-      - name: Set up JDK 21
-        uses: actions/setup-java@v5
+      - uses: actions/checkout@v3
+      - name: Set up JDK 11
+        uses: actions/setup-java@v3
         with:
-          java-version: '21'
-          distribution: 'oracle'
+          java-version: '11'
+          distribution: 'adopt-hotspot'
           server-id: github
           settings-path: ${{ github.workspace }}

@@ -50,9 +46,9 @@ jobs:
     runs-on: ubuntu-latest

     steps:
-      - uses: actions/checkout@v5
+      - uses: actions/checkout@v3
       - name: Use Node.js
-        uses: actions/setup-node@v5
+        uses: actions/setup-node@v3
         with:
           node-version: '20.x'
       - run: cd docs && npm ci

44

.github/workflows/codeql.yml

vendored

@@ -1,44 +0,0 @@
-name: CodeQL
-
-on:
-  push:
-    branches: [ main ]
-  pull_request:
-    branches: [ main ]
-  schedule:
-    - cron: '0 3 * * 1'
-
-jobs:
-  analyze:
-    name: Analyze
-    runs-on: ubuntu-latest
-    permissions:
-      actions: read
-      contents: read
-      security-events: write
-    strategy:
-      fail-fast: false
-      matrix:
-        language: [ 'java', 'javascript' ]
-    steps:
-      - name: Checkout repository
-        uses: actions/checkout@v5
-
-      - name: Set up JDK
-        if: matrix.language == 'java'
-        uses: actions/setup-java@v5
-        with:
-          distribution: oracle
-          java-version: '21'
-
-      - name: Initialize CodeQL
-        uses: github/codeql-action/init@v3
-        with:
-          languages: ${{ matrix.language }}
-
-      - name: Autobuild
-        uses: github/codeql-action/autobuild@v3
-
-      - name: Perform CodeQL Analysis
-        uses: github/codeql-action/analyze@v3
-

10

.github/workflows/gh-mvn-publish.yml

vendored

@@ -13,12 +13,12 @@ jobs:
       packages: write

     steps:
-      - uses: actions/checkout@v5
-      - name: Set up JDK 21
-        uses: actions/setup-java@v5
+      - uses: actions/checkout@v3
+      - name: Set up JDK 17
+        uses: actions/setup-java@v3
         with:
-          java-version: '21'
-          distribution: 'oracle'
+          java-version: '17'
+          distribution: 'temurin'
           server-id: github
           settings-path: ${{ github.workspace }}


2

.github/workflows/label-issue-stale.yml

vendored

@@ -14,7 +14,7 @@ jobs:
     runs-on: ubuntu-latest
     steps:
       - name: Mark stale issues
-        uses: actions/stale@v10
+        uses: actions/stale@v8
         with:
           repo-token: ${{ github.token }}
           days-before-stale: 15

10

.github/workflows/maven-publish.yml

vendored

@@ -24,13 +24,13 @@ jobs:
       packages: write

     steps:
-      - uses: actions/checkout@v5
+      - uses: actions/checkout@v3

-      - name: Set up JDK 21
-        uses: actions/setup-java@v5
+      - name: Set up JDK 17
+        uses: actions/setup-java@v3
         with:
-          java-version: '21'
-          distribution: 'oracle'
+          java-version: '17'
+          distribution: 'temurin'
           server-id: github # Value of the distributionManagement/repository/id field of the pom.xml
           settings-path: ${{ github.workspace }} # location for the settings.xml file


30

.github/workflows/pre-commit.yml

vendored

@@ -1,30 +0,0 @@
-name: Pre-commit Check on PR
-
-on:
-  pull_request:
-    types: [opened, reopened, synchronize]
-    branches:
-      - main
-
-#on:
-# pull_request:
-# branches: [ main ]
-# push:
-# branches: [ main ]
-
-jobs:
-  run:
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/checkout@v5
-      - uses: actions/setup-python@v6
-        with:
-          python-version: '3.x'
-      - name: Install pre-commit
-        run: |
-          python -m pip install --upgrade pip
-          pip install pre-commit
-# - name: Run pre-commit
-# run: |
-# pre-commit run --all-files --show-diff-on-failure
-

18

.github/workflows/publish-docs.yml

vendored

@@ -29,18 +29,18 @@ jobs:
       name: github-pages
       url: ${{ steps.deployment.outputs.page_url }}
     steps:
-      - uses: actions/checkout@v5
-      - name: Set up JDK 21
-        uses: actions/setup-java@v5
+      - uses: actions/checkout@v3
+      - name: Set up JDK 11
+        uses: actions/setup-java@v3
         with:
-          java-version: '21'
-          distribution: 'oracle'
+          java-version: '11'
+          distribution: 'adopt-hotspot'
           server-id: github # Value of the distributionManagement/repository/id field of the pom.xml
           settings-path: ${{ github.workspace }} # location for the settings.xml file

-      - uses: actions/checkout@v5
+      - uses: actions/checkout@v4
       - name: Use Node.js
-        uses: actions/setup-node@v5
+        uses: actions/setup-node@v3
         with:
           node-version: '20.x'
       - run: cd docs && npm ci
@@ -57,7 +57,7 @@ jobs:
         run: mvn --file pom.xml -U clean package && cp -r ./target/apidocs/. ./docs/build/apidocs

       - name: Doxygen Action
-        uses: mattnotmitt/doxygen-action@v1.12.0
+        uses: mattnotmitt/doxygen-action@v1.1.0
         with:
           doxyfile-path: "./Doxyfile"
           working-directory: "."
@@ -65,7 +65,7 @@ jobs:
       - name: Setup Pages
         uses: actions/configure-pages@v5
       - name: Upload artifact
-        uses: actions/upload-pages-artifact@v4
+        uses: actions/upload-pages-artifact@v3
         with:
           # Upload entire repository
           path: './docs/build/.'

14

.github/workflows/run-tests.yml

vendored

@@ -28,7 +28,7 @@ jobs:

     steps:
       - name: Checkout target branch
-        uses: actions/checkout@v5
+        uses: actions/checkout@v3
         with:
           ref: ${{ github.event.inputs.branch }}

@@ -36,19 +36,19 @@ jobs:
         run: |
           curl -fsSL https://ollama.com/install.sh | sh

-      - name: Set up JDK 21
-        uses: actions/setup-java@v5
+      - name: Set up JDK 17
+        uses: actions/setup-java@v3
         with:
-          java-version: '21'
-          distribution: 'oracle'
+          java-version: '17'
+          distribution: 'temurin'
           server-id: github
           settings-path: ${{ github.workspace }}

       - name: Run unit tests
-        run: make unit-tests
+        run: mvn clean test -Punit-tests

       - name: Run integration tests
-        run: make integration-tests-basic
+        run: mvn clean verify -Pintegration-tests
         env:
           USE_EXTERNAL_OLLAMA_HOST: "true"
           OLLAMA_HOST: "http://localhost:11434"

33

.github/workflows/stale.yml

vendored

@@ -1,33 +0,0 @@
-name: Mark stale issues and PRs
-
-on:
-  schedule:
-    - cron: '0 2 * * *'
-
-permissions:
-  issues: write
-  pull-requests: write
-
-jobs:
-  stale:
-    runs-on: ubuntu-latest
-    steps:
-      - uses: actions/stale@v10
-        with:
-          days-before-stale: 60
-          days-before-close: 14
-          stale-issue-label: 'stale'
-          stale-pr-label: 'stale'
-          exempt-issue-labels: 'pinned,security'
-          exempt-pr-labels: 'pinned,security'
-          stale-issue-message: >
-            This issue has been automatically marked as stale because it has not had
-            recent activity. It will be closed if no further activity occurs.
-          close-issue-message: >
-            Closing this stale issue. Feel free to reopen if this is still relevant.
-          stale-pr-message: >
-            This pull request has been automatically marked as stale due to inactivity.
-            It will be closed if no further activity occurs.
-          close-pr-message: >
-            Closing this stale pull request. Please reopen when you're ready to continue.
-

@@ -21,19 +21,11 @@ repos:

   # for commit message formatting
   - repo: https://github.com/commitizen-tools/commitizen
-    rev: v4.9.1
+    rev: v4.8.3
     hooks:
       - id: commitizen
         stages: [commit-msg]

-  - repo: local
-    hooks:
-      - id: format-code
-        name: Format Code
-        entry: make apply-formatting
-        language: system
-        always_run: true
-
   # # for java code quality
   # - repo: https://github.com/gherynos/pre-commit-java
   # rev: v0.6.10

@@ -1,9 +0,0 @@
-cff-version: 1.2.0
-message: "If you use this software, please cite it as below."
-authors:
-  - family-names: "Koujalgi"
-    given-names: "Amith"
-title: "Ollama4j: A Java Library (Wrapper/Binding) for Ollama Server"
-version: "1.1.0"
-date-released: 2023-12-19
-url: "https://github.com/ollama4j/ollama4j"

125

CONTRIBUTING.md

@@ -1,125 +0,0 @@
-## Contributing to Ollama4j
-
-Thanks for your interest in contributing! This guide explains how to set up your environment, make changes, and submit pull requests.
-
-### Code of Conduct
-
-By participating, you agree to abide by our [Code of Conduct](CODE_OF_CONDUCT.md).
-
-### Quick Start
-
-Prerequisites:
-
-- Java 11+
-- Maven 3.8+
-- Docker (required for integration tests)
-- Make (for convenience targets)
-- pre-commit (for Git hooks)
-
-Setup:
-
-```bash
-# 1) Fork the repo and clone your fork
-git clone https://github.com/<your-username>/ollama4j.git
-cd ollama4j
-
-# 2) Install and enable git hooks
-pre-commit install --hook-type pre-commit --hook-type commit-msg
-
-# 3) Prepare dev environment (installs husk deps/tools if needed)
-make dev
-```
-
-Build and test:
-
-```bash
-# Build
-make build
-
-# Run unit tests
-make unit-tests
-
-# Run integration tests (requires Docker running)
-make integration-tests
-```
-
-If you prefer raw Maven:
-
-```bash
-# Unit tests profile
-mvn -P unit-tests clean test
-
-# Integration tests profile (Docker required)
-mvn -P integration-tests -DskipUnitTests=true clean verify
-```
-
-### Commit Style
-
-We use Conventional Commits. Commit messages and PR titles should follow:
-
-```
-<type>(optional scope): <short summary>
-
-[optional body]
-[optional footer(s)]
-```
-
-Common types: `feat`, `fix`, `docs`, `refactor`, `test`, `build`, `chore`.
-
-Commit message formatting is enforced via `commitizen` through `pre-commit` hooks.
-
-### Pre-commit Hooks
-
-Before pushing, run:
-
-```bash
-pre-commit run -a
-```
-
-Hooks will check for merge conflicts, large files, YAML/XML/JSON validity, line endings, and basic formatting. Fix reported issues before opening a PR.
-
-### Coding Guidelines
-
-- Target Java 11+; match existing style and formatting.
-- Prefer clear, descriptive names over abbreviations.
-- Add Javadoc for public APIs and non-obvious logic.
-- Include meaningful tests for new features and bug fixes.
-- Avoid introducing new dependencies without discussion.
-
-### Tests
-
-- Unit tests: place under `src/test/java/**/unittests/`.
-- Integration tests: place under `src/test/java/**/integrationtests/` (uses Testcontainers; ensure Docker is running).
-
-### Documentation
-
-- Update `README.md`, Javadoc, and `docs/` when you change public APIs or user-facing behavior.
-- Add example snippets where useful. Keep API references consistent with the website content when applicable.
-
-### Pull Requests
-
-Before opening a PR:
-
-- Ensure `make build` and all tests pass locally.
-- Run `pre-commit run -a` and fix any issues.
-- Keep PRs focused and reasonably small. Link related issues (e.g., "Closes #123").
-- Describe the change, rationale, and any trade-offs in the PR description.
-
-Review process:
-
-- Maintainers will review for correctness, scope, tests, and docs.
-- You may be asked to iterate; please be responsive to comments.
-
-### Security
-
-If you discover a security issue, please do not open a public issue. Instead, email the maintainer at `koujalgi.amith@gmail.com` with details.
-
-### License
-
-By contributing, you agree that your contributions will be licensed under the project’s [MIT License](LICENSE).
-
-### Questions and Discussion
-
-Have questions or ideas? Open a GitHub Discussion or issue. We welcome feedback and proposals!
-
-

2

LICENSE

@@ -1,6 +1,6 @@
 MIT License

-Copyright (c) 2023 Amith Koujalgi and contributors
+Copyright (c) 2023 Amith Koujalgi

 Permission is hereby granted, free of charge, to any person obtaining a copy
 of this software and associated documentation files (the "Software"), to deal

71

Makefile

@@ -2,74 +2,41 @@ dev:
 	@echo "Setting up dev environment..."
 	@command -v pre-commit >/dev/null 2>&1 || { echo "Error: pre-commit is not installed. Please install it first."; exit 1; }
 	@command -v docker >/dev/null 2>&1 || { echo "Error: docker is not installed. Please install it first."; exit 1; }
-	@pre-commit install
-	@pre-commit autoupdate
-	@pre-commit install --install-hooks
+	pre-commit install
+	pre-commit autoupdate
+	pre-commit install --install-hooks

-check-formatting:
-	@echo "\033[0;34mChecking code formatting...\033[0m"
-	@mvn spotless:check
+build:
+	mvn -B clean install -Dgpg.skip=true

-apply-formatting:
-	@echo "\033[0;32mApplying code formatting...\033[0m"
-	@mvn spotless:apply
+full-build:
+	mvn -B clean install

-build: apply-formatting
-	@echo "\033[0;34mBuilding project (GPG skipped)...\033[0m"
-	@mvn -B clean install -Dgpg.skip=true -Dmaven.javadoc.skip=true
+unit-tests:
+	mvn clean test -Punit-tests

-full-build: apply-formatting
-	@echo "\033[0;34mPerforming full build...\033[0m"
-	@mvn -B clean install
+integration-tests:
+	export USE_EXTERNAL_OLLAMA_HOST=false && mvn clean verify -Pintegration-tests

-unit-tests: apply-formatting
-	@echo "\033[0;34mRunning unit tests...\033[0m"
-	@mvn clean test -Punit-tests
+integration-tests-remote:
+	export USE_EXTERNAL_OLLAMA_HOST=true && export OLLAMA_HOST=http://192.168.29.223:11434 && mvn clean verify -Pintegration-tests -Dgpg.skip=true

-integration-tests-all: apply-formatting
-	@echo "\033[0;34mRunning integration tests (local - all)...\033[0m"
-	@export USE_EXTERNAL_OLLAMA_HOST=false && mvn clean verify -Pintegration-tests
-
-integration-tests-basic: apply-formatting
-	@echo "\033[0;34mRunning integration tests (local - basic)...\033[0m"
-	@export USE_EXTERNAL_OLLAMA_HOST=false && mvn clean verify -Pintegration-tests -Dit.test=WithAuth
-
-integration-tests-remote: apply-formatting
-	@echo "\033[0;34mRunning integration tests (remote - all)...\033[0m"
-	@export USE_EXTERNAL_OLLAMA_HOST=true && export OLLAMA_HOST=http://192.168.29.229:11434 && mvn clean verify -Pintegration-tests -Dgpg.skip=true
-
 doxygen:
-	@echo "\033[0;34mGenerating documentation with Doxygen...\033[0m"
-	@doxygen Doxyfile
+	doxygen Doxyfile

-javadoc:
-	@echo "\033[0;34mGenerating Javadocs into '$(javadocfolder)'...\033[0m"
-	@mvn clean javadoc:javadoc
-	@if [ -f "target/reports/apidocs/index.html" ]; then \
-		echo "\033[0;32mJavadocs generated in target/reports/apidocs/index.html\033[0m"; \
-	else \
-		echo "\033[0;31mFailed to generate Javadocs in target/reports/apidocs\033[0m"; \
-		exit 1; \
-	fi
-
 list-releases:
-	@echo "\033[0;34mListing latest releases...\033[0m"
-	@curl 'https://central.sonatype.com/api/internal/browse/component/versions?sortField=normalizedVersion&sortDirection=desc&page=0&size=20&filter=namespace%3Aio.github.ollama4j%2Cname%3Aollama4j' \
+	curl 'https://central.sonatype.com/api/internal/browse/component/versions?sortField=normalizedVersion&sortDirection=desc&page=0&size=20&filter=namespace%3Aio.github.ollama4j%2Cname%3Aollama4j' \
 		--compressed \
 		--silent | jq -r '.components[].version'

 docs-build:
-	@echo "\033[0;34mBuilding documentation site...\033[0m"
-	@cd ./docs && npm ci --no-audit --fund=false && npm run build
+	npm i --prefix docs && npm run build --prefix docs

 docs-serve:
-	@echo "\033[0;34mServing documentation site...\033[0m"
-	@cd ./docs && npm install && npm run start
+	npm i --prefix docs && npm run start --prefix docs

 start-cpu:
-	@echo "\033[0;34mStarting Ollama (CPU mode)...\033[0m"
-	@docker run -it -v ~/ollama:/root/.ollama -p 11434:11434 ollama/ollama
+	docker run -it -v ~/ollama:/root/.ollama -p 11434:11434 ollama/ollama

 start-gpu:
-	@echo "\033[0;34mStarting Ollama (GPU mode)...\033[0m"
-	@docker run -it --gpus=all -v ~/ollama:/root/.ollama -p 11434:11434 ollama/ollama
+	docker run -it --gpus=all -v ~/ollama:/root/.ollama -p 11434:11434 ollama/ollama

171

README.md

@ -1,32 +1,26 @@

|

|||||||

<div align="center">

|

|

||||||

<img src='https://raw.githubusercontent.com/ollama4j/ollama4j/refs/heads/main/ollama4j-new.jpeg' width='200' alt="ollama4j-icon">

|

|

||||||

|

|

||||||

### Ollama4j

|

### Ollama4j

|

||||||

|

|

||||||

</div>

|

<p align="center">

|

||||||

|

<img src='https://raw.githubusercontent.com/ollama4j/ollama4j/65a9d526150da8fcd98e2af6a164f055572bf722/ollama4j.jpeg' width='100' alt="ollama4j-icon">

|

||||||

|

</p>

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

A Java library (wrapper/binding) for Ollama server.

|

A Java library (wrapper/binding) for Ollama server.

|

||||||

|

|

||||||

_Find more details on the **[website](https://ollama4j.github.io/ollama4j/)**._

|

Find more details on the [website](https://ollama4j.github.io/ollama4j/).

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

[](https://github.com/ollama4j/ollama4j/actions/workflows/run-tests.yml)

|

|

||||||

|

|

||||||

[](https://codecov.io/gh/ollama4j/ollama4j)

|

|

||||||

</div>

|

|

||||||

|

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

@ -35,60 +29,32 @@ _Find more details on the **[website](https://ollama4j.github.io/ollama4j/)**._

|

|||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

|

|

||||||

|

[](https://codecov.io/gh/ollama4j/ollama4j)

|

||||||

|

|

||||||

|

[](https://github.com/ollama4j/ollama4j/actions/workflows/run-tests.yml)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

</div>

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

## Table of Contents

|

## Table of Contents

|

||||||

|

|

||||||

- [Capabilities](#capabilities)

|

|

||||||

- [How does it work?](#how-does-it-work)

|

- [How does it work?](#how-does-it-work)

|

||||||

- [Requirements](#requirements)

|

- [Requirements](#requirements)

|

||||||

- [Usage](#usage)

|

- [Installation](#installation)

|

||||||

- [For Maven](#for-maven)

|

- [API Spec](https://ollama4j.github.io/ollama4j/category/apis---model-management)

|

||||||

- [Using Maven Central](#using-maven-central)

|

|

||||||

- [Using GitHub's Maven Package Repository](#using-githubs-maven-package-repository)

|

|

||||||

- [For Gradle](#for-gradle)

|

|

||||||

- [API Spec](#api-spec)

|

|

||||||

- [Examples](#examples)

|

- [Examples](#examples)

|

||||||

|

- [Javadoc](https://ollama4j.github.io/ollama4j/apidocs/)

|

||||||

- [Development](#development)

|

- [Development](#development)

|

||||||

- [Setup dev environment](#setup-dev-environment)

|

- [Contributions](#get-involved)

|

||||||

- [Build](#build)

|

|

||||||

- [Run unit tests](#run-unit-tests)

|

|

||||||

- [Run integration tests](#run-integration-tests)

|

|

||||||

- [Releases](#releases)

|

|

||||||

- [Get Involved](#get-involved)

|

|

||||||

- [Who's using Ollama4j?](#whos-using-ollama4j)

|

|

||||||

- [Growth](#growth)

|

|

||||||

- [References](#references)

|

- [References](#references)

|

||||||

- [Credits](#credits)

|

|

||||||

- [Appreciate the work?](#appreciate-the-work)

|

|

||||||

|

|

||||||

## Capabilities

|

#### How does it work?

|

||||||

|

|

||||||

- **Text generation**: Single-turn `generate` with optional streaming and advanced options

|

|

||||||

- **Chat**: Multi-turn chat with conversation history and roles

|

|

||||||

- **Tool/function calling**: Built-in tool invocation via annotations and tool specs

|

|

||||||

- **Reasoning/thinking modes**: Generate and chat with “thinking” outputs where supported

|

|

||||||

- **Image inputs (multimodal)**: Generate with images as inputs where models support vision

|

|

||||||

- **Embeddings**: Create vector embeddings for text

|

|

||||||

- **Async generation**: Fire-and-forget style generation APIs

|

|

||||||

- **Custom roles**: Define and use custom chat roles

|

|

||||||

- **Model management**: List, pull, create, delete, and get model details

|

|

||||||

- **Connectivity utilities**: Server `ping` and process status (`ps`)

|

|

||||||

- **Authentication**: Basic auth and bearer token support

|

|

||||||

- **Options builder**: Type-safe builder for model parameters and request options

|

|

||||||

- **Timeouts**: Configure connect/read/write timeouts

|

|

||||||

- **Logging**: Built-in logging hooks for requests and responses

|

|

||||||

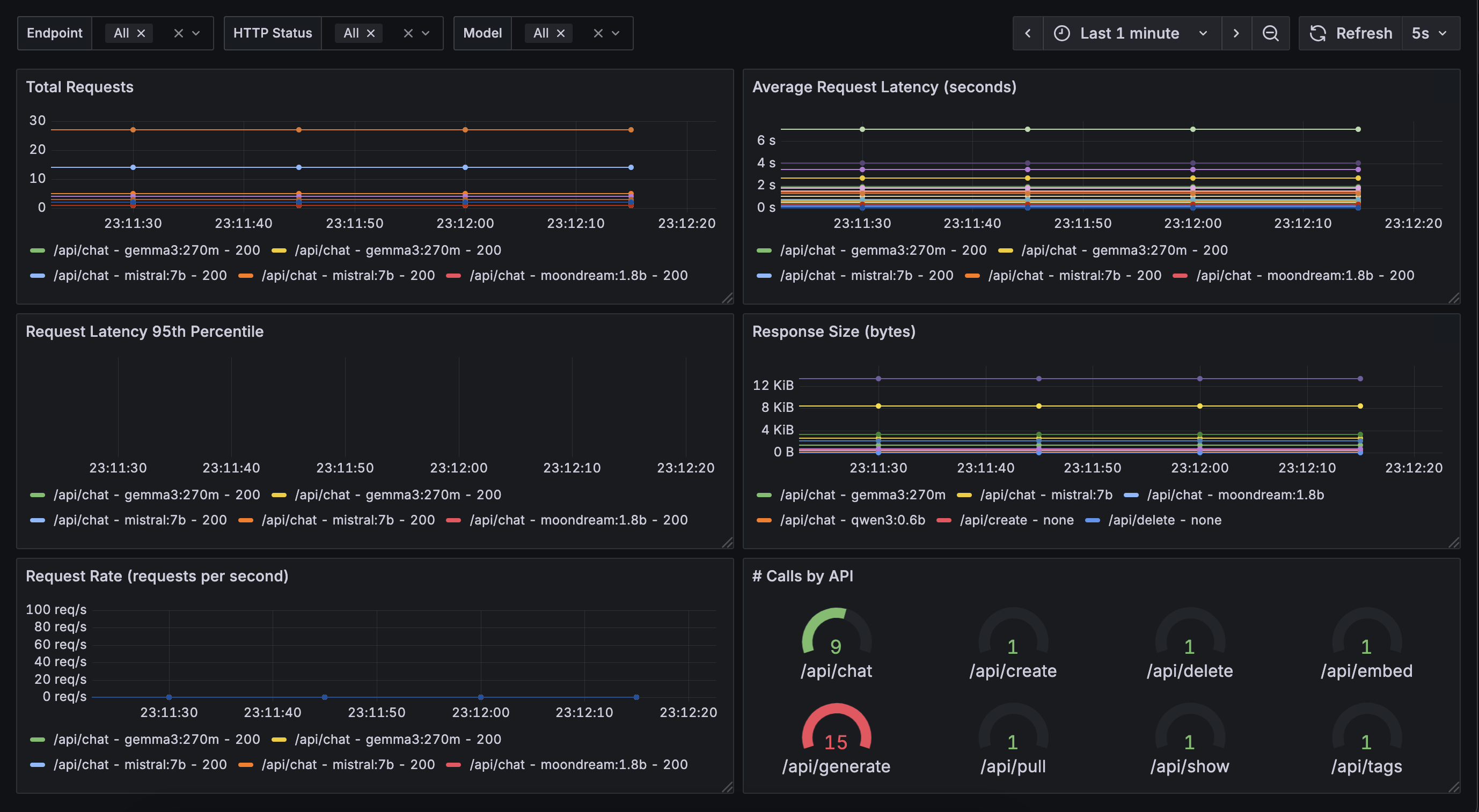

- **Metrics & Monitoring** 🆕: Built-in Prometheus metrics export for real-time monitoring of requests, model usage, and

|

|

||||||

performance. *(Beta feature – feedback/contributions welcome!)* -

|

|

||||||

Check out the [ollama4j-examples](https://github.com/ollama4j/ollama4j-examples) repository for details.

|

|

||||||

|

|

||||||

<div align="center">

|

|

||||||

<img src='metrics.png' width='100%' alt="ollama4j-icon">

|

|

||||||

</div>

|

|

||||||

|

|

||||||

## How does it work?

|

|

||||||

|

|

||||||

```mermaid

|

```mermaid

|

||||||

flowchart LR

|

flowchart LR

|

||||||

@ -102,16 +68,16 @@ _Find more details on the **[website](https://ollama4j.github.io/ollama4j/)**._

|

|||||||

end

|

end

|

||||||

```

|

```

|

||||||

|

|

||||||

## Requirements

|

#### Requirements

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

<p align="center">

|

|

||||||

<img src="https://img.shields.io/badge/Java-11%2B-green.svg?style=for-the-badge&labelColor=gray&label=Java&color=orange" alt="Java"/>

|

|

||||||

<a href="https://ollama.com/" target="_blank">

|

<a href="https://ollama.com/" target="_blank">

|

||||||

<img src="https://img.shields.io/badge/Ollama-0.11.10+-blue.svg?style=for-the-badge&labelColor=gray&label=Ollama&color=blue" alt="Ollama"/>

|

<img src="https://img.shields.io/badge/v0.3.0-green.svg?style=for-the-badge&labelColor=gray&label=Ollama&color=blue" alt=""/>

|

||||||

</a>

|

</a>

|

||||||

</p>

|

|

||||||

|

|

||||||

## Usage

|

## Installation

|

||||||

|

|

||||||

> [!NOTE]

|

> [!NOTE]

|

||||||

> We are now publishing the artifacts to both Maven Central and GitHub package repositories.

|

> We are now publishing the artifacts to both Maven Central and GitHub package repositories.

|

||||||

@ -136,7 +102,7 @@ In your Maven project, add this dependency:

|

|||||||

<dependency>

|

<dependency>

|

||||||

<groupId>io.github.ollama4j</groupId>

|

<groupId>io.github.ollama4j</groupId>

|

||||||

<artifactId>ollama4j</artifactId>

|

<artifactId>ollama4j</artifactId>

|

||||||

<version>1.1.0</version>

|

<version>1.0.100</version>

|

||||||

</dependency>

|

</dependency>

|

||||||

```

|

```

|

||||||

|

|

||||||

@ -192,7 +158,7 @@ In your Maven project, add this dependency:

|

|||||||

<dependency>

|

<dependency>

|

||||||

<groupId>io.github.ollama4j</groupId>

|

<groupId>io.github.ollama4j</groupId>

|

||||||

<artifactId>ollama4j</artifactId>

|

<artifactId>ollama4j</artifactId>

|

||||||

<version>1.1.0</version>

|

<version>1.0.100</version>

|

||||||

</dependency>

|

</dependency>

|

||||||

```

|

```

|

||||||

|

|

||||||

@ -202,7 +168,7 @@ In your Maven project, add this dependency:

|

|||||||

|

|

||||||

```groovy

|

```groovy

|

||||||

dependencies {

|

dependencies {

|

||||||

implementation 'io.github.ollama4j:ollama4j:1.1.0'

|

implementation 'io.github.ollama4j:ollama4j:1.0.100'

|

||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

@ -220,17 +186,12 @@ dependencies {

|

|||||||

|

|

||||||

[lib-shield]: https://img.shields.io/badge/ollama4j-get_latest_version-blue.svg?style=just-the-message&labelColor=gray

|

[lib-shield]: https://img.shields.io/badge/ollama4j-get_latest_version-blue.svg?style=just-the-message&labelColor=gray

|

||||||

|

|

||||||

### API Spec

|

#### API Spec

|

||||||

|

|

||||||

> [!TIP]

|

> [!TIP]

|

||||||

> Find the full API specifications on the [website](https://ollama4j.github.io/ollama4j/).

|

> Find the full API specifications on the [website](https://ollama4j.github.io/ollama4j/).

|

||||||

|

|

||||||

## Examples

|

### Development

|

||||||

|

|

||||||

For practical examples and usage patterns of the Ollama4j library, check out

|

|

||||||

the [ollama4j-examples](https://github.com/ollama4j/ollama4j-examples) repository.

|

|

||||||

|

|

||||||

## Development

|

|

||||||

|

|

||||||

Make sure you have `pre-commit` installed.

|

Make sure you have `pre-commit` installed.

|

||||||

|

|

||||||

@ -249,9 +210,7 @@ pip install pre-commit

|

|||||||

#### Setup dev environment

|

#### Setup dev environment

|

||||||

|

|

||||||

> **Note**

|

> **Note**

|

||||||

> If you're on Windows, install [Chocolatey Package Manager for Windows](https://chocolatey.org/install) and then

|

> If you're on Windows, install [Chocolatey Package Manager for Windows](https://chocolatey.org/install) and then install `make` by running `choco install make`. Just a little tip - run the command with administrator privileges if installation fails.

|

||||||

> install `make` by running `choco install make`. Just a little tip - run the command with administrator privileges if

|

|

||||||

> installation fails.

|

|

||||||

|

|

||||||

```shell

|

```shell

|

||||||

make dev

|

make dev

|

||||||

@ -282,24 +241,14 @@ make integration-tests

|

|||||||

|

|

||||||

New artifacts are published via the GitHub Actions CI workflow when a new release is created from the `main` branch.

|

New artifacts are published via the GitHub Actions CI workflow when a new release is created from the `main` branch.

|

||||||

|

|

||||||

## Get Involved

|

## Examples

|

||||||

|

|

||||||

<div align="center">

|

The `ollama4j-examples` repository contains examples for using the Ollama4j library. You can explore

|

||||||

|

it [here](https://github.com/ollama4j/ollama4j-examples).

|

||||||

|

|

||||||

<a href=""></a>

|

## ⭐ Give us a Star!

|

||||||

<a href=""></a>

|

|

||||||

<a href=""></a>

|

|

||||||

<a href=""></a>

|

|

||||||

<a href=""></a>

|

|

||||||

|

|

||||||

</div>

|

If you like or are using this project to build your own, please give us a star. It's a free way to show your support.

|

||||||

|

|

||||||

Contributions are most welcome! Whether it's reporting a bug, proposing an enhancement, or helping

|

|

||||||

with code - any sort of contribution is much appreciated.

|

|

||||||

|

|

||||||

<div style="font-size: 15px; font-weight: bold; padding-top: 10px; padding-bottom: 10px; border: 1px solid" align="center">

|

|

||||||

If you like or use this project, please give us a ⭐. It's a free way to show your support.

|

|

||||||

</div>

|

|

||||||

|

|

||||||

## Who's using Ollama4j?

|

## Who's using Ollama4j?

|

||||||

|

|

||||||

@ -316,18 +265,23 @@ If you like or are use this project, please give us a ⭐. It's a free way to sh

|

|||||||

| 9 | moqui-wechat | A moqui-wechat component | [GitHub](https://github.com/heguangyong/moqui-wechat) |

|

| 9 | moqui-wechat | A moqui-wechat component | [GitHub](https://github.com/heguangyong/moqui-wechat) |

|

||||||

| 10 | B4X | A set of simple and powerful RAD tools for Desktop and Server development | [Website](https://www.b4x.com/android/forum/threads/ollama4j-library-pnd_ollama4j-your-local-offline-llm-like-chatgpt.165003/) |

|

| 10 | B4X | A set of simple and powerful RAD tools for Desktop and Server development | [Website](https://www.b4x.com/android/forum/threads/ollama4j-library-pnd_ollama4j-your-local-offline-llm-like-chatgpt.165003/) |

|

||||||

| 11 | Research Article | Article: `Large language model based mutations in genetic improvement` - published on National Library of Medicine (National Center for Biotechnology Information) | [Website](https://pmc.ncbi.nlm.nih.gov/articles/PMC11750896/) |

|

| 11 | Research Article | Article: `Large language model based mutations in genetic improvement` - published on National Library of Medicine (National Center for Biotechnology Information) | [Website](https://pmc.ncbi.nlm.nih.gov/articles/PMC11750896/) |

|

||||||

| 12 | renaime | A LLaVa powered tool that automatically renames image files having messy file names. | [Website](https://devpost.com/software/renaime) |

|

|

||||||

|

|

||||||

## Growth

|

## Traction

|

||||||

|

|

||||||

|

[](https://star-history.com/#ollama4j/ollama4j&Date)

|

||||||

|

|

||||||

|

## Get Involved

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

|

||||||

|

<a href=""></a>

|

||||||

|

<a href=""></a>

|

||||||

|

<a href=""></a>

|

||||||

|

<a href=""></a>

|

||||||

|

<a href=""></a>

|

||||||

|

|

||||||

|

</div>

|

||||||

|

|

||||||

<p align="center">

|

|

||||||

<a href="https://star-history.com/#ollama4j/ollama4j&Date" target="_blank" rel="noopener noreferrer">

|

|

||||||

<img

|

|

||||||

src="https://api.star-history.com/svg?repos=ollama4j/ollama4j&type=Date"

|

|

||||||

alt="Star History Chart"

|

|

||||||

/>

|

|

||||||

</a>

|

|

||||||

</p>

|

|

||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

@ -339,6 +293,27 @@ If you like or are use this project, please give us a ⭐. It's a free way to sh

|

|||||||

|

|

||||||

[//]: # ()

|

[//]: # ()

|

||||||

|

|

||||||

|

|

||||||

|

Contributions are most welcome! Whether it's reporting a bug, proposing an enhancement, or helping

|

||||||

|

with code - any sort

|

||||||

|

of contribution is much appreciated.

|

||||||

|

|

||||||

|

## 🏷️ License and Citation

|

||||||

|

|

||||||

|

The code is available under [MIT License](./LICENSE).

|

||||||

|

|

||||||

|

If you find this project helpful in your research, please cite this work at

|

||||||

|

|

||||||

|

```

|

||||||

|

@misc{ollama4j2024,

|

||||||

|

author = {Amith Koujalgi},

|

||||||

|

title = {Ollama4j: A Java Library (Wrapper/Binding) for Ollama Server},

|

||||||

|

year = {2024},

|

||||||

|

month = {January},

|

||||||

|

url = {https://github.com/ollama4j/ollama4j}

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

### References

|

### References

|

||||||

|

|

||||||

- [Ollama REST APIs](https://github.com/jmorganca/ollama/blob/main/docs/api.md)

|

- [Ollama REST APIs](https://github.com/jmorganca/ollama/blob/main/docs/api.md)

|

||||||

|

|||||||

39

SECURITY.md


@ -1,39 +0,0 @@

|

|||||||

## Security Policy

|

|

||||||

|

|

||||||

### Supported Versions

|

|

||||||

|

|

||||||

We aim to support the latest released version of `ollama4j` and the most recent minor version prior to it. Older versions may receive fixes on a best-effort basis.

|

|

||||||

|

|

||||||

### Reporting a Vulnerability

|

|

||||||

|

|

||||||

Please do not open public GitHub issues for security vulnerabilities.

|

|

||||||

|

|

||||||

Instead, email the maintainer at:

|

|

||||||

|

|

||||||

```

|

|

||||||

koujalgi.amith@gmail.com

|

|

||||||

```

|

|

||||||

|

|

||||||

Include as much detail as possible:

|

|

||||||

|

|

||||||

- A clear description of the issue and impact

|

|

||||||

- Steps to reproduce or proof-of-concept

|

|

||||||

- Affected version(s) and environment

|

|

||||||

- Any suggested mitigations or patches

|

|

||||||

|

|

||||||

You should receive an acknowledgement within 72 hours. We will work with you to validate the issue, determine severity, and prepare a fix.

|

|

||||||

|

|

||||||

### Disclosure

|

|

||||||

|

|

||||||

We follow a responsible disclosure process:

|

|

||||||

|

|

||||||

1. Receive and validate report privately.

|

|

||||||

2. Develop and test a fix.

|

|

||||||

3. Coordinate a release that includes the fix.

|

|

||||||

4. Publicly credit the reporter (if desired) in release notes.

|

|

||||||

|

|

||||||

### GPG Signatures

|

|

||||||

|

|

||||||

Releases may be signed as part of our CI pipeline. If verification fails or you have concerns about release integrity, please contact us via the email above.

|

|

||||||

|

|

||||||

|

|

||||||

186

docs/METRICS.md


@ -1,186 +0,0 @@

|

|||||||

# Prometheus Metrics Integration

|

|

||||||

|

|

||||||

Ollama4j now includes comprehensive Prometheus metrics collection to help you monitor and observe your Ollama API usage. This feature allows you to track request counts, response times, model usage, and other operational metrics.

|

|

||||||

|

|

||||||

## Features

|

|

||||||

|

|

||||||

The metrics integration provides the following metrics:

|

|

||||||

|

|

||||||

- **Request Metrics**: Total requests, duration histograms, and response time summaries by endpoint

|

|

||||||

- **Model Usage**: Model-specific usage statistics and response times

|

|

||||||

- **Token Generation**: Token count tracking per model

|

|

||||||

- **Error Tracking**: Error counts by type and endpoint

|

|

||||||

- **Active Connections**: Current number of active API connections

|

|

||||||

|

|

||||||

## Quick Start

|

|

||||||

|

|

||||||

### 1. Enable Metrics Collection

|

|

||||||

|

|

||||||

```java

|

|

||||||

import io.github.ollama4j.Ollama;

|

|

||||||

|

|

||||||

// Create API instance with metrics enabled

|

|

||||||

Ollama ollama = new Ollama();

|

|

||||||

ollama.

|

|

||||||

|

|

||||||

setMetricsEnabled(true);

|

|

||||||

```

|

|

||||||

|

|

||||||

### 2. Start Metrics Server

|

|

||||||

|

|

||||||

```java

|

|

||||||

import io.prometheus.client.exporter.HTTPServer;

|

|

||||||

|

|

||||||

// Start Prometheus metrics HTTP server on port 8080

|

|

||||||

HTTPServer metricsServer = new HTTPServer(8080);

|

|

||||||

System.out.println("Metrics available at: http://localhost:8080/metrics");

|

|

||||||

```

|

|

||||||

|

|

||||||

### 3. Use the API (Metrics are automatically collected)

|

|

||||||

|

|

||||||

```java

|

|

||||||

// All API calls are automatically instrumented

|

|

||||||

boolean isReachable = ollama.ping();

|

|

||||||

|

|

||||||

Map<String, Object> format = new HashMap<>();

|

|

||||||

format.put("type", "json");

|

|

||||||

OllamaResult result = ollama.generateWithFormat(

|

|

||||||

"llama2",

|

|

||||||

"Generate a JSON object",

|

|

||||||

format

|

|

||||||

);

|

|

||||||

```

|

|

||||||

|

|

||||||

## Available Metrics

|

|

||||||

|

|

||||||

### Request Metrics

|

|

||||||

|

|

||||||

- `ollama_api_requests_total` - Total number of API requests by endpoint, method, and status

|

|

||||||

- `ollama_api_request_duration_seconds` - Request duration histogram by endpoint and method

|

|

||||||

- `ollama_api_response_time_seconds` - Response time summary with percentiles

|

|

||||||

|

|

||||||

### Model Metrics

|

|

||||||

|

|

||||||

- `ollama_model_usage_total` - Model usage count by model name and operation

|

|

||||||

- `ollama_model_response_time_seconds` - Model response time histogram

|

|

||||||

- `ollama_tokens_generated_total` - Total tokens generated by model

|

|

||||||

|

|

||||||

### System Metrics

|

|

||||||

|

|

||||||

- `ollama_api_active_connections` - Current number of active connections

|

|

||||||

- `ollama_api_errors_total` - Error count by endpoint and error type

|

|

||||||

|

|

||||||

## Example Metrics Output

|

|

||||||

|

|

||||||

```

|

|

||||||

# HELP ollama_api_requests_total Total number of Ollama API requests

|

|

||||||

# TYPE ollama_api_requests_total counter

|

|

||||||

ollama_api_requests_total{endpoint="/api/generate",method="POST",status="success"} 5.0

|

|

||||||

ollama_api_requests_total{endpoint="/api/embed",method="POST",status="success"} 3.0

|

|

||||||

|

|

||||||

# HELP ollama_api_request_duration_seconds Duration of Ollama API requests in seconds

|

|

||||||

# TYPE ollama_api_request_duration_seconds histogram

|

|

||||||

ollama_api_request_duration_seconds_bucket{endpoint="/api/generate",method="POST",le="0.1"} 0.0

|

|

||||||

ollama_api_request_duration_seconds_bucket{endpoint="/api/generate",method="POST",le="0.5"} 2.0

|

|

||||||

ollama_api_request_duration_seconds_bucket{endpoint="/api/generate",method="POST",le="1.0"} 4.0

|

|

||||||

ollama_api_request_duration_seconds_bucket{endpoint="/api/generate",method="POST",le="+Inf"} 5.0

|

|

||||||

ollama_api_request_duration_seconds_sum{endpoint="/api/generate",method="POST"} 2.5

|

|

||||||

ollama_api_request_duration_seconds_count{endpoint="/api/generate",method="POST"} 5.0

|

|

||||||

|

|

||||||

# HELP ollama_model_usage_total Total number of model usage requests

|

|

||||||

# TYPE ollama_model_usage_total counter

|

|

||||||

ollama_model_usage_total{model_name="llama2",operation="generate_with_format"} 5.0

|

|

||||||

ollama_model_usage_total{model_name="llama2",operation="embed"} 3.0

|

|

||||||

|

|

||||||

# HELP ollama_tokens_generated_total Total number of tokens generated

|

|

||||||

# TYPE ollama_tokens_generated_total counter

|

|

||||||

ollama_tokens_generated_total{model_name="llama2"} 150.0

|

|

||||||

```

|

|

||||||
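As a rough illustration of how the sample output above can be read, the following Java sketch parses exposition-format lines, derives the mean request duration from the `_sum` and `_count` series, and converts cumulative `_bucket` values into per-bucket counts. The class and method names (`MetricsSketch`, `parseSamples`, `meanSeconds`, `perBucket`) are hypothetical and are not part of ollama4j or the Prometheus client. The parsing is deliberately simplified and assumes the value is the last space-separated token on each line.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

public class MetricsSketch {

    /** Parses "name{labels} value" lines, skipping blank lines and # HELP / # TYPE comments. */
    public static Map<String, Double> parseSamples(String exposition) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String line : exposition.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            // Simplification: treat everything after the last space as the value.
            int split = trimmed.lastIndexOf(' ');
            if (split < 0) {
                continue;
            }
            samples.put(trimmed.substring(0, split),
                    Double.parseDouble(trimmed.substring(split + 1)));
        }
        return samples;
    }

    /** Mean duration = <metric>_sum / <metric>_count for one label set. */
    public static double meanSeconds(Map<String, Double> samples, String metric, String labels) {
        double sum = samples.getOrDefault(metric + "_sum" + labels, 0.0);
        double count = samples.getOrDefault(metric + "_count" + labels, 0.0);
        return count == 0 ? 0.0 : sum / count;
    }

    /** Histogram buckets are cumulative; subtract the previous bucket to get per-bucket counts. */
    public static double[] perBucket(double[] cumulative) {
        double[] counts = new double[cumulative.length];
        double previous = 0;
        for (int i = 0; i < cumulative.length; i++) {
            counts[i] = cumulative[i] - previous;
            previous = cumulative[i];
        }
        return counts;
    }

    public static void main(String[] args) {
        String metric = "ollama_api_request_duration_seconds";
        String labels = "{endpoint=\"/api/generate\",method=\"POST\"}";
        String exposition =
                "# TYPE ollama_api_request_duration_seconds histogram\n"
                + metric + "_sum" + labels + " 2.5\n"
                + metric + "_count" + labels + " 5.0\n";
        Map<String, Double> samples = parseSamples(exposition);
        System.out.println("mean seconds: " + meanSeconds(samples, metric, labels));
        // Cumulative counts for le=0.1, 0.5, 1.0, +Inf taken from the sample output.
        System.out.println("per bucket: " + Arrays.toString(perBucket(new double[] {0, 2, 4, 5})));
    }
}
```

With the sample values, a sum of 2.5 and a count of 5.0 give a mean of 0.5 seconds per request, and the cumulative buckets 0, 2, 4, 5 correspond to 0, 2, 2 and 1 requests in each successive bucket.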

|

|

||||||

## Configuration

|

|

||||||

|

|

||||||

### Enable/Disable Metrics

|

|

||||||

|

|

||||||

```java

|

|

||||||

Ollama ollama = new Ollama();

|

|

||||||

|

|

||||||

// Enable metrics collection

|

|

||||||

ollama.setMetricsEnabled(true);

|

|

||||||

|

|

||||||

// Disable metrics collection (default)

|

|

||||||

ollama.setMetricsEnabled(false);

|

|

||||||

```

|

|

||||||

|

|

||||||

### Custom Metrics Server

|

|

||||||

|

|

||||||

```java

|

|

||||||

import io.prometheus.client.exporter.HTTPServer;

|

|

||||||

|

|

||||||

// Start on custom port

|

|

||||||

HTTPServer metricsServer = new HTTPServer(9090);

|

|

||||||

|

|

||||||

// Start on custom host and port

|

|

||||||

HTTPServer metricsServer = new HTTPServer("0.0.0.0", 9090);

|

|

||||||

```

|

|

||||||

|

|

||||||

## Integration with Prometheus

|

|

||||||

|

|

||||||

### Prometheus Configuration

|

|

||||||

|

|

||||||

Add this to your `prometheus.yml`:

|

|

||||||

|

|

||||||

```yaml

|

|

||||||

scrape_configs:

|

|

||||||